Intro

As generative engines reshape online discovery, citation accuracy has become one of the most important, and most fragile, components of brand visibility. Unlike traditional search results, AI-generated answers can:

- misattribute your content
- cite competitors in place of you
- omit your brand entirely
- pull outdated references
- mix up similar entities
- generate fabricated citations
- link to low-authority or incorrect sources

These errors can spread quickly across models and influence millions of users.

Fortunately, every major generative engine now offers processes for requesting corrections — but each system works differently, and success requires strategic preparation.

This article provides a complete GEO-focused guide to requesting citation corrections across generative engines, including:

- how AI citation errors happen
- how to diagnose the problem
- how to prepare correction evidence
- where to submit correction requests
- how to increase acceptance probability
- how to prevent future misattribution

This is your definitive playbook for maintaining accurate representation in AI-generated summaries.

Part 1: Why Citation Errors Happen in Generative Engines

AI models may cite the wrong source because of:

1. Incomplete or conflicting metadata

Engines infer attribution from:

- schema
- OpenGraph data
- page titles
- canonical URLs

Missing or mismatched data causes misattribution.
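A quick way to catch these mismatches is to compare a page's canonical URL, og:url, og:title and <title> yourself before an engine does. The Python sketch below uses only the standard library's html.parser; which differences count as a "mismatch" is our assumption (for example, a brand suffix in <title> will be flagged even if intentional), so treat the output as a review list rather than a verdict.

```python
from html.parser import HTMLParser


class MetadataParser(HTMLParser):
    """Collects the attribution-related tags a generative engine may read."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.og = {}
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        prop = attrs.get("property") or ""
        if tag == "link" and "canonical" in rel:
            self.canonical = attrs.get("href")
        elif tag == "meta" and prop.startswith("og:"):
            self.og[prop] = attrs.get("content")
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_mismatches(html):
    """Return human-readable problems with canonical, OpenGraph and title metadata."""
    parser = MetadataParser()
    parser.feed(html)
    parser.close()
    problems = []
    if not parser.canonical:
        problems.append("missing canonical URL")
    og_url = parser.og.get("og:url")
    if not og_url:
        problems.append("missing og:url")
    elif parser.canonical and og_url.rstrip("/") != parser.canonical.rstrip("/"):
        problems.append(f"og:url {og_url} != canonical {parser.canonical}")
    og_title = parser.og.get("og:title")
    if og_title and og_title.strip() != parser.title.strip():
        problems.append("og:title differs from <title>")
    return problems


# Hypothetical page head whose og:url points somewhere other than the canonical URL.
sample_head = """<html><head><title>Brand Facts</title>
<link rel="canonical" href="https://example.com/facts/">
<meta property="og:url" content="https://example.com/about">
<meta property="og:title" content="Brand Facts">
</head></html>"""

print(find_mismatches(sample_head))
# ['og:url https://example.com/about != canonical https://example.com/facts/']
```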

2. Entity confusion

Engines mix up:

- similar brand names
- product variants
- founders with the same name
- overlapping categories

This is the most common cause of incorrect citations.
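Spelling similarity is only one source of entity confusion, but it is easy to screen for. This sketch uses Python's difflib.SequenceMatcher to flag names that look close to yours; the brand names and the 0.8 threshold are hypothetical and should be tuned against names you have actually seen engines confuse.

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lower-case and drop spaces so 'North Wind' and 'Northwind' compare equal."""
    return name.lower().replace(" ", "")


def confusable_names(brand, other_names, threshold=0.8):
    """Return (name, score) pairs whose similarity to `brand` meets `threshold`, highest first."""
    target = normalize(brand)
    matches = []
    for name in other_names:
        score = SequenceMatcher(None, target, normalize(name)).ratio()
        if score >= threshold:
            matches.append((name, round(score, 2)))
    return sorted(matches, key=lambda pair: pair[1], reverse=True)


# Hypothetical brand and nearby names.
suspects = confusable_names("Northwind", ["North Wind Labs", "Northwynd", "Acme Analytics"])
print(suspects)  # [('Northwynd', 0.89), ('North Wind Labs', 0.82)]
```

A high score only means the names are easy to mix up on the page; it does not prove an engine has confused them.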

3. Outdated training data

A model's built-in knowledge reflects its training data, which can be months or years old. If your brand changed its:

- domain
- ownership
- product names
- positioning

citations may be based on historical data.

4. Weak entity authority

If the engine is unsure who you are, it chooses a safer, more authoritative entity.

5. Insufficient reference signals

Engines prioritize:

- strong backlinks
- authoritative mentions
- high-quality structured data

Without these signals, engines are more likely to infer attribution incorrectly.

6. Retrieval errors

During real-time retrieval, the engine may:

- pull the wrong page
- misinterpret context
- mix sources during synthesis

Understanding the root cause helps determine the type of correction needed.

Part 2: Types of Citation Issues You Can Correct

Not all citation errors are equal. Here are the types you can — and cannot — fix.

Fixable Errors

1. Wrong source cited

Engine attributes content to the wrong website.

2. Your brand omitted from citations

You are the original source of the information the AI summarized, but it cites others.

3. Outdated attribution

Engine cites older versions of your content.

4. Partial misattribution

Some lines are attributed correctly, others incorrectly.

5. Mixed-source attribution

A competitor is cited alongside you for material that is yours.

6. Fabricated citation using your brand

The AI invents a URL attributed to your brand that doesn't exist.
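When auditing many answers, it helps to label each one with the error types above. The Python sketch below assumes you have already extracted the cited URLs from an answer that summarizes your content, and that `known_urls` comes from your own sitemap (both assumptions). It only covers URL-level cases (fabricated, omitted or wrong source, mixed-source); outdated or partial attribution still needs a person to read the answer.

```python
from urllib.parse import urlparse


def url_key(url):
    """Normalize a URL to host + path, ignoring scheme, 'www.' and trailing slashes."""
    parts = urlparse(url)
    host = (parts.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return host + parts.path.rstrip("/")


def classify_citations(cited_urls, own_domain, known_urls):
    """Label URL-level citation errors for an answer built from YOUR content."""
    known = {url_key(u) for u in known_urls}
    own = [u for u in cited_urls if url_key(u).split("/")[0] == own_domain]
    others = [u for u in cited_urls if u not in own]
    fabricated = [u for u in own if url_key(u) not in known]  # on your domain, never published
    genuine = [u for u in own if url_key(u) in known]

    issues = []
    if fabricated:
        issues.append(("fabricated citation", fabricated))
    if not genuine:
        issues.append(("brand omitted or wrong source", others))
    elif others:
        issues.append(("mixed-source attribution", others))
    return issues


# Hypothetical answer sources for content that is actually yours.
sources = [
    "https://www.example.com/guide",
    "https://competitor.test/guide",
    "https://example.com/made-up-page",
]
issues = classify_citations(sources, "example.com", ["https://example.com/guide/"])
print(issues)
```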

Harder (But Still Addressable) Errors

7. Citation based on training data

Harder to fix, but corrections can influence retrieval-based systems.

8. Systemic entity confusion

Requires strong entity cleanup across the web.

Not Fixable (Today)

9. Training corpus deletions

You cannot currently force deletion from past training sets, but you can correct future retrieval behavior.

10. AI paraphrase reuse without explicit citation

Engines are not legally required to cite paraphrased content.

The focus of GEO correction workflows is on the fixable citation errors.

Part 3: Before Requesting Corrections — Prepare Your Evidence

AI engines respond better when your documentation is:

- factual
- concise
- authoritative
- stable
- consistent

Prepare the following:

1. The Incorrect Citation

Include:

- screenshot
- exact text of the AI answer
- the incorrect source it cited
- timestamp and engine version (if visible)
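Keeping these details in a consistent, machine-readable record makes it easier to reuse the same evidence across several engines' forms and to compare answers before and after a correction. The field names below are our own assumptions, not any engine's required format; the sketch simply serializes one observation to JSON with a UTC timestamp.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def utc_now():
    """Current time in UTC as ISO 8601, to the second."""
    return datetime.now(timezone.utc).isoformat(timespec="seconds")


@dataclass
class IncorrectCitation:
    """One observed citation error (hypothetical schema for your own records)."""
    engine: str
    query: str
    answer_text: str          # exact text of the AI answer
    cited_url: str            # the incorrect source it cited
    screenshot_path: str
    engine_version: str = "not shown"
    captured_at: str = field(default_factory=utc_now)

    def to_json(self):
        return json.dumps(asdict(self), indent=2)


# Hypothetical observation.
record = IncorrectCitation(
    engine="Perplexity",
    query="who publishes the annual GEO citation study",
    answer_text="The study was published by Competitor Inc.",
    cited_url="https://competitor.test/study",
    screenshot_path="evidence/perplexity-answer.png",
)
print(record.to_json())
```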

2. The Correct Source

Provide:

- the canonical page URL
- the publication date
- the author
- evidence showing your ownership

Engines require proof of correctness.

3. Supporting Metadata

Provide:

- structured data
- canonical URL
- schema screenshots
- OpenGraph tags
- page titles
- Knowledge Panel evidence (if relevant)

Metadata consistency increases correction success.

4. Third-Party Validation

Attach:

- press mentions
- authoritative backlinks
- citations from high-trust sources
- public references confirming your identity

This strengthens your authority signal.

5. Clear, polite, factual explanation

Avoid emotion — engines prioritize clarity and correctness.

Once evidence is assembled, you’re ready to submit.

Part 4: How to Request Corrections — Engine by Engine

Each generative engine has a different correction workflow.

Below are the 2025 procedures.

Google AI Overviews (formerly SGE, Search Generative Experience)

Correction Path:

- Open the answer panel
- Click “Feedback” (flag icon)
- Select “Incorrect citation”
- Submit:
  - correct URL
  - explanation
  - screenshot
  - structured data overview
  - updated facts page link

Tip:

Google responds fastest when the correction aligns with the identity shown in your Knowledge Panel and Wikidata entry.

Bing Copilot (AI Search)

Correction Path:

- Hover over the citation
- Click “Report issue”
- Provide:
  - correct source
  - evidence
  - context
  - canonical branding doc

Tip:

Copilot uses Bing’s index heavily — update Bing Webmaster Tools before submitting.

Perplexity

Correction Path:

- Scroll to sources
- Click feedback icon
- Choose “Wrong source” or “Missing source”
- Provide correct link and supporting docs

Tip:

Perplexity has the fastest correction cycle — often under 72 hours.

ChatGPT Search / Browse Mode

Correction Path:

- Click “Report result”
- Choose “Incorrect citation”
- Submit:
  - screenshot
  - correct URL
  - metadata proof

Tip:

GPT systems weigh canonical URLs heavily — ensure they’re perfect.

Claude.ai (Anthropic)

Correction Path:

- Select flagged text
- Click “Report issue”
- Provide evidence

Tip:

Claude prioritizes ethical sourcing — provide clear provenance evidence.

Brave Summaries

Correction Path:

- Submit correction via Brave Support
- Include source URL and evidence

Tip:

Brave favors open data sources (Wikidata, Wikipedia). Align your entity pages there.

You.com

Correction Path:

- Select issue category
- Provide correct URL
- Attach screenshots

Tip:

Corrections are more successful when entity metadata is consistent across profiles.

Part 5: How To Increase the Probability of Successful Corrections

Corrections succeed when engines are confident in your authority.

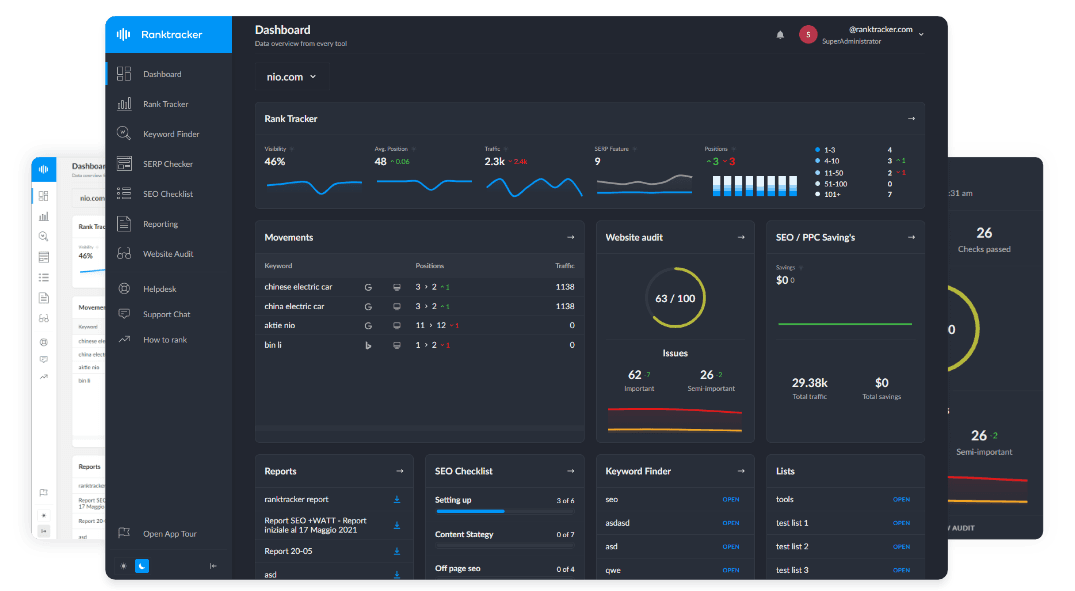

The All-in-One Platform for Effective SEO

Behind every successful business is a strong SEO campaign. But with countless optimization tools and techniques out there to choose from, it can be hard to know where to start. Well, fear no more, cause I've got just the thing to help. Presenting the Ranktracker all-in-one platform for effective SEO

We have finally opened registration to Ranktracker absolutely free!

Create a free accountOr Sign in using your credentials

Here’s how to maximize acceptance.

1. Strengthen Entity Authority First

Engines trust authoritative entities.

Use Ranktracker tools to:

- build authoritative backlinks
- monitor your brand mentions
- increase domain trust signals

2. Fix Your Schema Before Submitting

Engines cross-check your:

- mainEntityOfPage
- author
- isBasedOn
- citation
- identifier
- canonical URL

Consistency = credibility.
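To illustrate what "consistent" means here, the sketch below builds Article markup in which mainEntityOfPage, url and the canonical URL all agree, and fills author, isBasedOn, citation and identifier (all real Schema.org properties of CreativeWork, which Article inherits). Every URL, name and ID is a placeholder, and the check is a simple self-audit, not a description of how any engine validates markup.

```python
import json

CANONICAL = "https://example.com/research/ai-citation-study"  # placeholder canonical URL

article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "AI Citation Study",
    "url": CANONICAL,
    "mainEntityOfPage": {"@type": "WebPage", "@id": CANONICAL},
    "author": {"@type": "Person", "name": "Jane Doe", "url": "https://example.com/team/jane-doe"},
    "publisher": {"@type": "Organization", "name": "Example Co", "url": "https://example.com"},
    "isBasedOn": "https://example.com/data/citation-survey",
    "citation": ["https://example.org/related-report"],
    "identifier": "example-co-study-001",
}


def canonical_mismatches(data, canonical):
    """List the properties that do not point at the canonical URL."""
    main = data.get("mainEntityOfPage")
    main_id = main.get("@id") if isinstance(main, dict) else main
    found = {"mainEntityOfPage": main_id, "url": data.get("url")}
    return [name for name, value in found.items() if value != canonical]


def script_tag(data):
    """Wrap the markup in the JSON-LD script element, typically placed in the page <head>."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


print(canonical_mismatches(article, CANONICAL))  # []
print(script_tag(article))
```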

3. Publish a Canonical Brand Facts Page

A single page stating:

- who you are
- what you publish
- what you own

Engines use this as a reference.

4. Align Your External Profiles

Update:

- LinkedIn
- Crunchbase
- Wikidata
- directory listings

Engines value cross-web consistency.
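One common way to connect a brand facts page to these external profiles is Organization markup with a sameAs list. The sketch below uses placeholder profile URLs (the Wikidata ID in particular is not a real item) and checks that each profile you intend to align is actually linked; the required set is an assumption drawn from the list above.

```python
from urllib.parse import urlparse

# Placeholder Organization markup for a brand facts page.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "@id": "https://example.com/#organization",
    "name": "Example Co",
    "url": "https://example.com",
    "sameAs": [
        "https://www.linkedin.com/company/example-co",
        "https://www.crunchbase.com/organization/example-co",
        "https://www.wikidata.org/wiki/Q00000000",  # placeholder, not a real item
    ],
}

REQUIRED_PROFILES = {"linkedin.com", "crunchbase.com", "wikidata.org"}


def missing_profiles(org, required=REQUIRED_PROFILES):
    """Return required profile hosts that the sameAs list does not link to."""
    hosts = set()
    for link in org.get("sameAs", []):
        name = (urlparse(link).hostname or "").lower()
        hosts.add(name[4:] if name.startswith("www.") else name)
    return sorted(required - hosts)


print(missing_profiles(organization))  # []
```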

5. Submit High-Quality Evidence

Provide:

- screenshots
- URLs
- structured data
- third-party validation

The clearer the evidence, the faster the correction.

6. Be Patient but Persistent

Corrections often take 2–8 weeks, depending on the engine.

Part 6: Preventing Citation Errors Before They Happen

The best correction is prevention.

Prevention Method 1: Strengthen Semantic Clarity

Make your entity unmistakable:

- unique brand definitions
- consistent naming conventions
- strong schema markup
- entity-anchored internal linking

Prevention Method 2: Publish Citation-Friendly Content

Use:

- factual lists
- structured explanations
- clear attributions
- timestamped data

AI prefers citing clean, factual content.

Prevention Method 3: Monitor Weekly

Proactively identify:

- new citations
- missing citations
- competitor over-attribution
- entity confusion patterns
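If you record which domains each engine cites for a fixed set of tracked queries every week, the first three checks above reduce to comparing two snapshots. This is a minimal sketch assuming that snapshot format ({query: set of cited domains}) and hypothetical queries and domains; spotting entity confusion patterns still needs someone to read the answers.

```python
def weekly_diff(previous, current, own_domain):
    """Compare two weekly snapshots shaped like {query: set of cited domains}."""
    report = {"new": [], "missing": [], "competitor_only": []}
    for query, domains in current.items():
        before = previous.get(query, set())
        cited_now = own_domain in domains
        if cited_now and own_domain not in before:
            report["new"].append(query)          # newly cited this week
        if not cited_now and own_domain in before:
            report["missing"].append(query)      # citation lost since last week
        if not cited_now and domains:
            report["competitor_only"].append((query, sorted(domains)))
    return report


last_week = {
    "best rank tracker": {"example.com", "rival.test"},
    "what is geo": {"rival.test"},
}
this_week = {
    "best rank tracker": {"rival.test"},
    "what is geo": {"example.com", "rival.test"},
    "ai citation audit": {"other.test"},
}
report = weekly_diff(last_week, this_week, "example.com")
print(report)
```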

Prevention Method 4: Maintain Recency

Outdated content is far more likely to be misattributed.
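To act on this, you can periodically list pages whose last update is old. The sketch below assumes you already have each page's dateModified value (for example, from its Article markup); the 180-day cutoff is an arbitrary placeholder, not a threshold any engine publishes.

```python
from datetime import date


def stale_pages(pages, today, max_age_days=180):
    """pages: {url: dateModified string}. Return URLs older than max_age_days, oldest first."""
    stale = []
    for url, modified in pages.items():
        # Keep only YYYY-MM-DD so full timestamps also parse on older Pythons.
        age = (today - date.fromisoformat(modified[:10])).days
        if age > max_age_days:
            stale.append((age, url))
    return [url for _, url in sorted(stale, reverse=True)]


# Hypothetical pages and dates.
pages = {
    "https://example.com/a": "2025-05-01",
    "https://example.com/b": "2024-01-10",
    "https://example.com/c": "2024-10-01T09:30:00+00:00",
}
print(stale_pages(pages, date(2025, 6, 1)))
```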

Prevention Method 5: Improve Structured Data Coverage

Use:

- JSON-LD
- Article schema
- Organization schema
- Product schema
- Knowledge Graph alignment

Better structure → fewer mistakes.
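To see which of these types a page actually exposes, you can pull every JSON-LD block and collect its @type values, including those inside @graph and nested objects. This standard-library sketch reports which of a wanted set of types (Article, Organization and Product, taken from the list above) are absent and records invalid JSON blocks instead of silently dropping them. Note that it counts nested types too, so a publisher Organization inside an Article satisfies "Organization".

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects parsed JSON-LD blocks from <script type="application/ld+json">."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self.errors = []
        self._capturing = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._capturing = True
            self._buffer = []

    def handle_data(self, data):
        if self._capturing:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._capturing:
            self._capturing = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError as exc:
                self.errors.append(str(exc))


def schema_types(node, found=None):
    """Recursively gather every @type string in a JSON-LD structure."""
    found = set() if found is None else found
    if isinstance(node, list):
        for item in node:
            schema_types(item, found)
    elif isinstance(node, dict):
        kind = node.get("@type")
        if isinstance(kind, str):
            found.add(kind)
        elif isinstance(kind, list):
            found.update(k for k in kind if isinstance(k, str))
        for value in node.values():
            schema_types(value, found)
    return found


def coverage_gaps(html, wanted=("Article", "Organization", "Product")):
    """Return the wanted Schema.org types that no JSON-LD block on the page declares."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    found = schema_types(parser.blocks)
    return [t for t in wanted if t not in found]


sample_page = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@graph": [
  {"@type": "Article", "headline": "Guide",
   "publisher": {"@type": "Organization", "name": "Example Co"}}
]}
</script></head><body></body></html>"""

print(coverage_gaps(sample_page))  # ['Product']
```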

Part 7: The AI Citation Correction Checklist (Copy/Paste)

Before Submitting

- Collect screenshots
- Identify incorrect citation
- Provide correct source URL
- Validate evidence
- Gather structured metadata
- Verify canonical URL
- Align external profiles
- Update Schema.org markup
- Publish canonical facts page

Submission

- Choose engine-specific correction form
- Provide context
- Upload screenshots
- Quote factual evidence
- Provide cross-web validation
- Submit politely and concisely

After Submission

- Monitor weekly
- Update content freshness
- Strengthen entity authority
- Track new citations
- Resubmit if needed after 4–6 weeks

This workflow maximizes correction success across generative engines.
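The timing guidance in this article (corrections often take 2–8 weeks; resubmit after 4–6 weeks) can be turned into a simple follow-up calendar. This is a hypothetical helper: the 8-week monitoring span and the 4–6 week resubmission window come straight from those figures and may need adjusting per engine.

```python
from datetime import date, timedelta


def follow_up_plan(submitted, monitor_weeks=8, resubmit_after=(4, 6)):
    """Weekly check dates plus the window in which to resubmit if nothing has changed."""
    checks = [submitted + timedelta(weeks=w) for w in range(1, monitor_weeks + 1)]
    earliest, latest = (submitted + timedelta(weeks=w) for w in resubmit_after)
    return checks, (earliest, latest)


checks, window = follow_up_plan(date(2025, 3, 3))
print(checks[0], checks[-1])  # 2025-03-10 2025-04-28
print(*window)                # 2025-03-31 2025-04-14
```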

Conclusion: Citation Corrections Are Now a Core GEO Skill

In generative search, your visibility depends on accurate attribution. Incorrect citations can:

- distort your brand
- weaken your authority
- give competitors credit for your work
- confuse users
- lower GEO performance

The good news: Every major AI engine now supports correction requests — and brands that follow a structured, evidence-backed approach consistently achieve accurate corrections.

Correcting citations is no longer a support task. It is a strategic pillar of brand governance in the generative era.

The brands that master citation corrections will control how AI engines represent them and win visibility in the answer layer of search.