Intro

N-Grams are contiguous sequences of N words (or tokens) taken from a text. They are used in Natural Language Processing (NLP) for language modeling, text prediction, and information retrieval.

Types of N-Grams

N-Grams are classified based on the number of words they contain:

1. Unigrams (N=1)

- Single words in a sequence.

- Example: "SEO is important" → [SEO], [is], [important]

- Use Case: Keyword analysis, sentiment classification.

2. Bigrams (N=2)

- Two-word sequences.

- Example: "SEO is important" → [SEO is], [is important]

- Use Case: Search query optimization, phrase prediction.

3. Trigrams (N=3)

- Three-word sequences.

- Example: "SEO is important" → [SEO is important]

- Use Case: Text generation, language modeling.

4. Higher-Order N-Grams (N>3)

- Longer phrase structures.

- Example: "Best SEO practices for 2024" → [Best SEO practices for], [SEO practices for 2024]

- Use Case: Deep linguistic modeling, AI-driven text generation.
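All four types come from the same sliding-window idea. The sketch below shows it in a few lines of Python, assuming simple whitespace tokenization. The function name ngrams is our own and not a library API. It reproduces the examples above:

```python
def ngrams(text: str, n: int) -> list[str]:
    """Return the contiguous n-word sequences of `text`, joined by spaces."""
    if n < 1:
        raise ValueError("n must be at least 1")
    words = text.split()  # naive whitespace tokenization (simplifying assumption)
    # Slide a window of size n across the words; yields nothing if n > len(words)
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]

sentence = "SEO is important"
print(ngrams(sentence, 1))  # ['SEO', 'is', 'important']
print(ngrams(sentence, 2))  # ['SEO is', 'is important']
print(ngrams(sentence, 3))  # ['SEO is important']
print(ngrams("Best SEO practices for 2024", 4))
# ['Best SEO practices for', 'SEO practices for 2024']
```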

Uses of N-Grams in NLP

✅ Search Engine Optimization (SEO)

- Improves search relevance by matching long-tail queries with indexed content.
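To show why word order matters for long-tail matching, the sketch below compares a query's bigrams with a page's bigrams. This scoring function is hypothetical and written only for illustration. The names bigram_coverage and the sample pages are invented, and this is not how any particular search engine ranks content.

```python
import re

def bigrams(text: str) -> set[tuple[str, str]]:
    # Lowercase and keep alphanumeric tokens only (a simplifying assumption)
    words = re.findall(r"[a-z0-9]+", text.lower())
    return set(zip(words, words[1:]))

def bigram_coverage(query: str, document: str) -> float:
    """Fraction of the query's bigrams that also appear in the document."""
    q = bigrams(query)
    if not q:  # single-word queries have no bigrams
        return 0.0
    return len(q & bigrams(document)) / len(q)

pages = {
    "/guide": "A practical guide to local SEO for small businesses.",
    "/news": "SEO news: small changes for local businesses this week.",
}
query = "local SEO for small businesses"
for url, body in pages.items():
    print(url, bigram_coverage(query, body))
```

Both pages contain every word of the query, so a unigram check would rate them equally. Only the bigram check shows that /guide actually contains the phrase (coverage 1.0 versus 0.0).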

✅ Text Prediction & Auto-Suggestions

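- Suggests likely next words from the preceding ones, which is the idea behind predictive keyboards and search autocomplete. Modern chatbots rely mainly on neural language models rather than raw N-Gram counts.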
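A bare-bones version of next-word suggestion counts which word most often follows the previous one. The sketch below uses a tiny, made-up list of past queries and hypothetical helper names. Production autocomplete systems are far more sophisticated.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus: list[str]) -> dict[str, Counter]:
    """For each word, count which words follow it in the corpus."""
    following: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            following[prev][nxt] += 1
    return following

def suggest(model: dict[str, Counter], prev_word: str, k: int = 3) -> list[str]:
    """Return up to k of the most frequent words seen after prev_word."""
    counts = model.get(prev_word.lower())  # .get avoids inserting empty entries
    if not counts:
        return []
    return [word for word, _ in counts.most_common(k)]

queries = [
    "best seo tools",
    "best seo practices",
    "best seo tools 2024",
    "seo tools for beginners",
    "best running shoes",
]
model = train_bigram_model(queries)
print(suggest(model, "best"))  # ['seo', 'running']
print(suggest(model, "seo"))   # ['tools', 'practices']
```

With this toy data, "seo" follows "best" three times, so it is suggested first. After "seo", "tools" (three times) ranks ahead of "practices" (once).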

✅ Sentiment Analysis & Spam Detection

- Detects frequent patterns in positive/negative reviews or spam content.
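One simple approach is to count bigrams per class and look for phrases that are frequent in one class but absent from the other. The sketch below uses invented toy messages and a hypothetical helper name. A real filter would typically feed such counts into a classifier such as Naive Bayes rather than use them directly.

```python
from collections import Counter

def bigram_counts(texts: list[str]) -> Counter:
    """Count (word, next_word) pairs across all texts."""
    counts: Counter = Counter()
    for text in texts:
        words = text.lower().split()
        counts.update(zip(words, words[1:]))
    return counts

spam = ["click here to win", "win a free prize click here", "free prize inside"]
ham = ["meeting moved to friday", "see the report here", "lunch on friday"]

spam_counts = bigram_counts(spam)
ham_counts = bigram_counts(ham)

# Bigrams that repeat in spam but never occur in ham are candidate spam signals
signals = [(bg, c) for bg, c in spam_counts.most_common()
           if c > 1 and bg not in ham_counts]
print(signals)  # [(('click', 'here'), 2), (('free', 'prize'), 2)]
```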

✅ Machine Translation

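- Before neural models took over, statistical translation systems (including earlier versions of Google Translate) used N-Gram language models to prefer fluent word order in the output language.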

✅ Speech Recognition

- Improves voice-to-text accuracy by recognizing common word sequences.
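Speech recognizers often face acoustically similar candidates. The classic pair is "recognize speech" versus "wreck a nice beach". An N-Gram language model can rank them by how plausible each word sequence is. This sketch assumes a toy corpus, bigrams with add-one (Laplace) smoothing, and hypothetical function names. Real systems combine such scores with acoustic evidence and usually use stronger smoothing such as Kneser-Ney.

```python
import math
from collections import Counter

def train(corpus: list[str]) -> tuple[Counter, Counter, int]:
    """Count bigrams and their histories, padding sentences with <s> and </s>."""
    history: Counter = Counter()
    pairs: Counter = Counter()
    vocab: set[str] = set()
    for sentence in corpus:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        vocab.update(words[1:])  # every token that can be predicted
        for prev, word in zip(words, words[1:]):
            history[prev] += 1
            pairs[(prev, word)] += 1
    return history, pairs, len(vocab)

def log_prob(sentence: str, history: Counter, pairs: Counter, vocab_size: int) -> float:
    """Sum of log P(word | previous word) under add-one smoothing."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    total = 0.0
    for prev, word in zip(words, words[1:]):
        # Unseen bigrams (and unknown words) still get a small, nonzero probability
        p = (pairs[(prev, word)] + 1) / (history[prev] + vocab_size)
        total += math.log(p)
    return total

corpus = [
    "it is hard to recognize speech",
    "software can recognize speech",
    "we walked on a nice beach",
]
model = train(corpus)
candidates = ["recognize speech", "wreck a nice beach"]
best = max(candidates, key=lambda s: log_prob(s, *model))
print(best)
```

In this toy setup, the shorter candidate also multiplies fewer probabilities, which helps it. Treat the example only as an illustration of the language-model scoring step.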

Best Practices for Using N-Grams

✅ Choose the Right N

- Use unigrams and bigrams for search optimization.

- Use trigrams and higher N-Grams for deeper NLP insights.

✅ Clean & Preprocess Text Data

- Normalize case, strip punctuation, and remove stopwords and irrelevant tokens to shrink the vocabulary and improve model efficiency.
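A minimal cleaning step before extracting N-Grams might look like the sketch below. The stopword set is a small illustrative subset (libraries such as NLTK and spaCy ship fuller lists), and the helper names are hypothetical.

```python
import re

# Illustrative subset only; real projects usually load a fuller list
STOPWORDS = {"a", "an", "the", "is", "are", "of", "for", "to", "in", "and", "on"}

def preprocess(text: str, remove_stopwords: bool = True) -> list[str]:
    tokens = re.findall(r"[a-z0-9]+", text.lower())  # lowercase, drop punctuation
    if remove_stopwords:
        tokens = [t for t in tokens if t not in STOPWORDS]
    return tokens

def bigrams(tokens: list[str]) -> list[str]:
    return [f"{a} {b}" for a, b in zip(tokens, tokens[1:])]

review = "The battery life is GREAT, and the screen is bright!"
print(bigrams(preprocess(review)))
# ['battery life', 'life great', 'great screen', 'screen bright']
```

Removing stopwords creates bigrams that never appeared in the original text, such as "life great". That trade-off is why stopword removal needs care (see Common Mistakes to Avoid).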

✅ Optimize for Performance

- The number of possible N-Grams grows rapidly with N, increasing memory use and training time, so balance N against your data size and compute budget.

Common Mistakes to Avoid

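❌ Removing Stopwords Blindly from Lower N-Grams

- Stopwords can be part of a meaningful phrase, especially in geographical queries: dropping "of" from "Isle of Man" or "and" from "Trinidad and Tobago" breaks the place name into misleading N-Grams.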
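The effect is easy to see with a query like "Flights to the Isle of Man". When stopwords are kept, the trigram "isle of man" survives. When they are removed, the place name disappears. Below is a minimal sketch using an illustrative stopword subset and a hypothetical helper:

```python
import re

STOPWORDS = {"a", "an", "the", "to", "of", "in", "for", "on"}  # illustrative subset

def trigrams(text: str, drop_stopwords: bool) -> list[str]:
    words = re.findall(r"[a-z]+", text.lower())
    if drop_stopwords:
        words = [w for w in words if w not in STOPWORDS]
    return [" ".join(words[i:i + 3]) for i in range(len(words) - 2)]

query = "Flights to the Isle of Man"
print(trigrams(query, drop_stopwords=False))
# ['flights to the', 'to the isle', 'the isle of', 'isle of man']
print(trigrams(query, drop_stopwords=True))
# ['flights isle man']
```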

❌ Using Excessively Long N-Grams

- Long N-Grams rarely repeat, so their counts are sparse and noisy. They inflate model size while generalizing poorly to new text.
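You can measure this sparsity directly. Count how many distinct N-Grams a text contains, and check what share of them occur only once. The sample text below is invented and deliberately repetitive, and the helper name is hypothetical.

```python
import re
from collections import Counter

def ngram_stats(text: str, n: int) -> tuple[int, float]:
    """Return (number of distinct n-grams, share of them that occur only once)."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))
    if not counts:
        return 0, 0.0
    singletons = sum(1 for c in counts.values() if c == 1)
    return len(counts), singletons / len(counts)

text = ("seo tools help with seo. the best seo tools track keywords. "
        "seo tools also track backlinks and the best keywords.")
for n in range(1, 6):
    distinct, once = ngram_stats(text, n)
    print(f"n={n}: {distinct} distinct, {once:.0%} seen only once")
```

On this sample, 5 of 11 distinct unigrams occur only once. From N=3 upward, every N-Gram is unique, so the counts give a model no repeated evidence to learn from.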

Tools for Working with N-Grams

- NLTK & spaCy: Python libraries for tokenization and text processing (NLTK's nltk.util module includes an ngrams helper).

- Google AutoML NLP: trains custom machine-learning models for text classification and entity extraction.

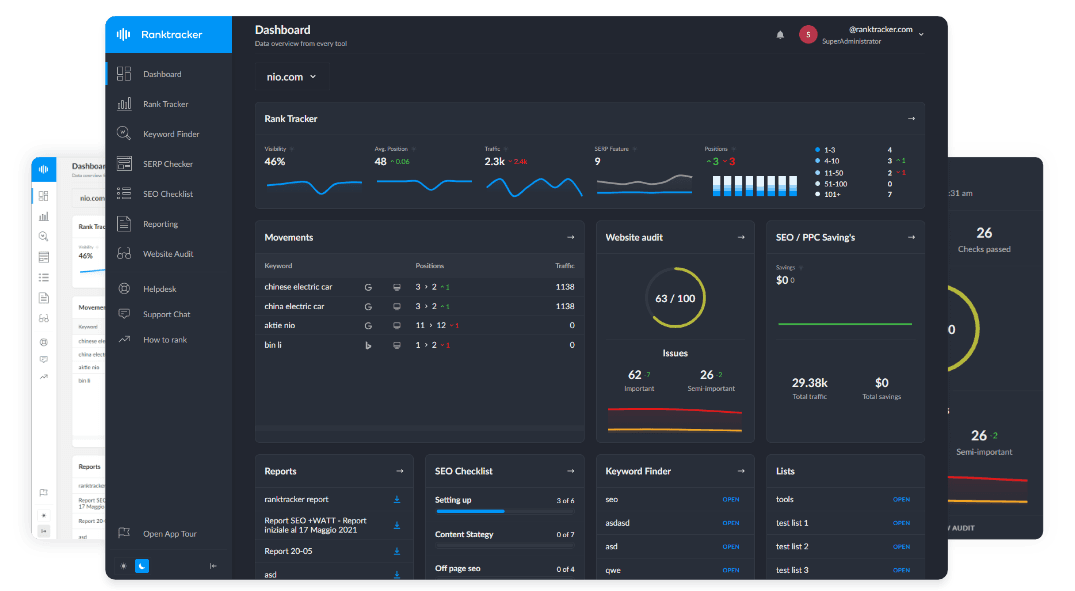

- Ranktracker’s Keyword Finder: Identifies high-ranking N-Gram phrases.

Conclusion: Leveraging N-Grams for NLP & Search Optimization

N-Grams support search optimization, text prediction, and many other NLP applications. By choosing an appropriate N and preprocessing text carefully, businesses can match search queries more precisely, improve content relevance, and build better language models.