Intro

Search is no longer text-only. Generative engines now process and interpret text, images, audio, video, screenshots, charts, product photos, handwriting, UI layouts, and even workflows — all in a single query.

This new paradigm is called multi-modal generative search, and it is already rolling out across Google’s AI Overviews (formerly SGE), Microsoft Copilot, ChatGPT Search, Claude, Perplexity, and Apple’s on-device AI features.

Users are beginning to ask questions like:

- “Who makes this product?” (with a photo)
- “Summarize this PDF and compare it to that website.”
- “Fix the code in this screenshot.”
- “Plan a trip using this map image.”
- “Find me the best tools based on this video demo.”
- “Explain this chart and recommend actions.”

In 2026 and beyond, brands won’t just be optimized for text-driven queries — they will need to be understood visually, aurally, and contextually by generative AI.

This article explains how multi-modal generative search works, how engines interpret different data types, and what GEO practitioners must do to adapt.

Part 1: What Is Multi-Modal Generative Search?

Traditional search engines primarily matched text queries against text documents. Multi-modal generative search accepts and correlates multiple forms of input simultaneously, such as:

- text
- images
- live video
- screenshots
- voice commands
- documents
- structured data
- code
- charts
- spatial data

The engine doesn’t just retrieve matching results; it interprets the content in a way that approximates how a human would.

Example:

Uploaded image → analyzed → product identified → features compared → generative summary produced → best alternatives suggested.

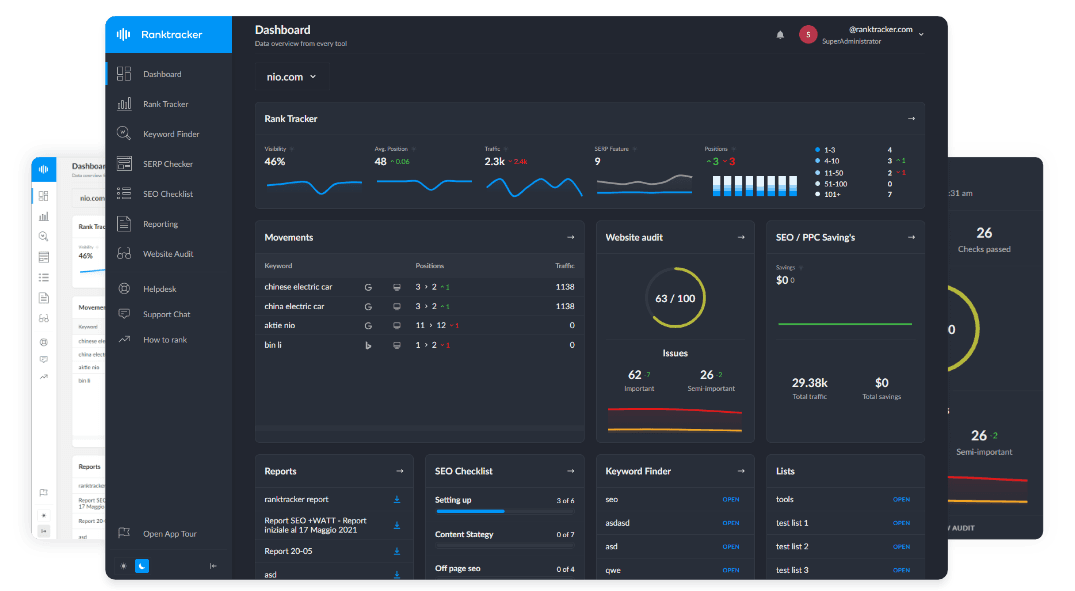

This is the next evolution of retrieval → reasoning → judgment.

Part 2: Why Multi-Modal Search Is Exploding Now

Three technological breakthroughs made this possible:

1. Unified Multi-Modal Model Architectures

Models like GPT-4o, Claude 3.5 Sonnet, and Gemini Ultra can (with modality support varying by model):

- see
- read
- listen
- interpret
- reason

in a single pass.

2. Vision-Language Fusion

Vision and language are now processed together, not separately. This allows engines to:

- understand relationships between text and images
- infer concepts that are not explicitly shown
- identify entities in visual contexts

3. On-Device and Edge AI

With Apple, Google, and Meta pushing on-device reasoning, multi-modal search becomes faster and more private — and therefore mainstream.

Multi-modal search is the new default for generative engines.

Part 3: How Multi-Modal Engines Interpret Content

When a user uploads an image, screenshot, or audio clip, engines follow a multi-stage process:

Stage 1 — Content Extraction

Identify what is in the content:

- objects
- brands
- text (OCR)
- colors
- charts
- logos
- UI elements
- faces (blurred where required)
- scenery
- diagrams

Stage 2 — Semantic Understanding

Interpret what it means:

- purpose
- category
- relationships
- style
- usage context
- emotional tone
- functionality

Stage 3 — Entity Linking

Connect elements to known entities:

- products
- companies
- locations
- concepts
- people
- SKUs

Stage 4 — Judgment & Reasoning

Generate actions or insights:

- compare this to alternatives
- summarize what’s happening
- extract key points
- recommend options
- provide instructions
- detect errors

Multi-modal search is not retrieval — it is interpretation plus reasoning.
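
The four stages above can be pictured as a simple pipeline. The following is a minimal Python sketch, not a description of how any particular engine is built: the `Detection` type, the `KNOWN_ENTITIES` table, the "Acme" brand, and every function name are hypothetical, and the extraction stage is stubbed with hard-coded detections instead of a real vision or OCR model.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    kind: str   # e.g. "logo", "text", "object"
    value: str  # what was detected


# Hypothetical lookup table standing in for an engine's knowledge graph.
KNOWN_ENTITIES = {
    "acme": {"type": "company", "name": "Acme Corp"},
    "acme kettle 2": {"type": "product", "name": "Acme Kettle 2"},
}


def extract(upload: dict) -> list[Detection]:
    """Stage 1: content extraction (stubbed; no real vision model is called)."""
    return [Detection(kind, value) for kind, value in upload["detections"]]


def understand(detections: list[Detection]) -> dict:
    """Stage 2: semantic understanding, reduced here to a crude category guess."""
    kinds = {d.kind for d in detections}
    category = "product photo" if "logo" in kinds else "unknown"
    return {"category": category}


def link_entities(detections: list[Detection]) -> list[dict]:
    """Stage 3: entity linking via a case-insensitive table lookup."""
    linked = []
    for d in detections:
        entity = KNOWN_ENTITIES.get(d.value.strip().lower())
        if entity is not None:
            linked.append(entity)
    return linked


def reason(meaning: dict, entities: list[dict]) -> str:
    """Stage 4: judgment and reasoning, reduced to a templated summary."""
    if not entities:
        return f"Could not identify anything in this {meaning['category']}."
    names = ", ".join(e["name"] for e in entities)
    return f"This {meaning['category']} appears to show: {names}."


def answer(upload: dict) -> str:
    detections = extract(upload)
    return reason(understand(detections), link_entities(detections))


upload = {"detections": [("logo", "ACME"), ("text", "Acme Kettle 2"), ("object", "kettle")]}
print(answer(upload))
```

The practical point for GEO is Stage 3: if the text and logos in an asset do not match an entity the engine already knows, nothing downstream can attribute the asset to the brand.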

Part 4: How This Changes Optimization Forever

GEO must now evolve beyond text-only optimization.

Below are the transformations.

Transformation 1: Images Become Ranking Signals

Generative engines extract:

- brand logos
- product labels
- packaging styles
- room layouts
- charts
- UI screenshots
- feature diagrams

This means brands must:

- optimize product images
- watermark visuals
- align visuals with entity definitions
- maintain consistent brand identity across media

Your image library becomes your ranking library.

Transformation 2: Video Becomes a First-Class Search Asset

Engines now:

- transcribe
- summarize
- index
- break down steps in tutorials
- identify brands in frames
- extract features from demos

By 2027, video-first GEO is likely to become essential for:

- SaaS tools
- e-commerce
- education
- home services
- B2B companies explaining complex workflows

Your best videos will become your “generative answers.”

Transformation 3: Screenshots Become Search Queries

Users will increasingly search by screenshot.

A screenshot of:

- an error message
- a product page
- a competitor’s feature
- a pricing table
- a UI flow
- a report

triggers multi-modal understanding.

Brands must:

- structure UI elements
- maintain consistent visual language
- ensure branding is legible in screenshots

Your product UI becomes searchable.

Transformation 4: Charts and Data Visuals Are Now “Queryable”

AI engines can interpret:

- bar charts
- line charts
- KPI dashboards
- heatmaps
- analytics reports

They can infer:

- trends
- anomalies
- comparisons
- predictions

Brands need:

- clean visuals
- labeled axes
- high-contrast designs
- metadata describing each data graphic

Your analytics become machine-readable.
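
One way to supply metadata describing a data graphic is to generate a plain-text caption from the same numbers that drive the chart, then publish it as the caption or alt text. The sketch below is only an illustration under that assumption: `describe_chart` and the signup figures are hypothetical, and it handles a single series only.

```python
def describe_chart(title, x_label, y_label, points):
    """Build a plain-text description of a single-series chart.

    points is a list of (x, y) pairs in display order.
    """
    if len(points) < 2:
        raise ValueError("need at least two points to describe a trend")

    (first_x, first_y), (last_x, last_y) = points[0], points[-1]
    if last_y > first_y:
        trend = "rose"
    elif last_y < first_y:
        trend = "fell"
    else:
        trend = "was unchanged"

    # max() returns the first point with the highest y value.
    peak_x, peak_y = max(points, key=lambda p: p[1])

    return (
        f"{title}. {y_label} by {x_label}: {trend} from {first_y} ({first_x}) "
        f"to {last_y} ({last_x}); peak of {peak_y} in {peak_x}."
    )


caption = describe_chart(
    "Monthly signups", "month", "Signups",
    [("Jan", 120), ("Feb", 150), ("Mar", 140), ("Apr", 180)],
)
print(caption)
```

Because the caption is derived from the data rather than written by hand, it stays in sync with the chart whenever the numbers change.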

Transformation 5: Multi-Modal Content Requires Multi-Modal Schema

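Schema.org already defines media types such as ImageObject, VideoObject, and AudioObject. It may eventually expand to cover more specific types, for example:

- visualObject
- audiovisualObject
- screenshotObject
- chartObject

These names are speculative and are not part of the current vocabulary.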

Structured metadata becomes essential for:

- product demos
- infographics
- UI screenshots
- comparison tables

Engines need machine cues to understand multimedia.
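
Structured metadata for media can be published today with the existing schema.org `ImageObject` and `VideoObject` types. The following sketch builds a JSON-LD snippet for a product demo video; the `media_jsonld` helper, the product name, and the `example.com` URLs are hypothetical placeholders, while the property names (`name`, `description`, `contentUrl`, `thumbnailUrl`, `uploadDate`) come from schema.org.

```python
import json

# Only existing schema.org media types are allowed in this sketch.
ALLOWED_TYPES = {"ImageObject", "VideoObject"}


def media_jsonld(media_type, name, description, content_url, **extra):
    """Return a schema.org media object serialized as a JSON-LD string."""
    if media_type not in ALLOWED_TYPES:
        raise ValueError(f"unsupported media type: {media_type}")
    data = {
        "@context": "https://schema.org",
        "@type": media_type,
        "name": name,
        "description": description,
        "contentUrl": content_url,
    }
    # Extra keyword arguments are passed through as additional properties.
    data.update(extra)
    return json.dumps(data, indent=2)


snippet = media_jsonld(
    "VideoObject",
    name="Acme Kettle 2 product demo",
    description="Two-minute walkthrough of the Acme Kettle 2 temperature presets.",
    content_url="https://example.com/media/acme-kettle-2-demo.mp4",
    thumbnailUrl="https://example.com/media/acme-kettle-2-demo.jpg",
    uploadDate="2025-01-15",
)
print(f'<script type="application/ld+json">\n{snippet}\n</script>')
```

The printed `<script>` element is what would be placed in the page that hosts the video.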

Part 5: Multi-Modal Generative Engines Change Query Categories

New query types will dominate generative search.

1. “Identify This” Queries

Uploaded image → AI identifies:

- product
- location
- vehicle
- brand
- clothing item
- UI element
- device

2. “Explain This” Queries

AI explains:

- dashboards
- charts
- code screenshots
- product manuals
- flow diagrams

These require multi-modal literacy from brands.

3. “Compare These” Queries

Image or video comparison triggers:

- product alternatives
- pricing comparisons
- feature differentiation
- competitor analysis

Your brand must appear in these comparisons.

4. “Fix This” Queries

Screenshot → AI fixes:

- code
- spreadsheet
- UI layout
- document
- settings

Brands that provide clear troubleshooting steps get cited most.

5. “Is This Good?” Queries

User shows product → AI reviews it.

Your brand reputation becomes visible beyond text.

Part 6: What Brands Must Do to Optimize for Multi-Modal AI

Here is your full optimization protocol.

Step 1: Create Multi-Modal Canonical Assets

You need:

- canonical product images
- canonical UI screenshots
- canonical videos
- annotated diagrams
- visual feature breakdowns

Engines must see the same visuals across the web.

Step 2: Add Multi-Modal Metadata to All Assets

Use:

- alt text
- ARIA labeling
- semantic descriptions
- watermark metadata
- structured captions
- version tags
- embedding-friendly filenames

These signals help models link visuals to entities.
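
As an illustration of the filename and version-tag items, the sketch below turns a brand, subject, and view into a lowercase, hyphenated filename. It assumes that "embedding-friendly" means descriptive and consistently structured; the `descriptive_filename` helper, its naming pattern, and the "Acme" example are hypothetical rather than a documented standard.

```python
import re
import unicodedata


def descriptive_filename(brand, subject, view, extension, version=None):
    """Build a filename such as brand-subject-view-v3.jpg from descriptive parts."""
    parts = [brand, subject, view]
    if version is not None:
        parts.append(f"v{version}")

    slug_parts = []
    for part in parts:
        # Strip accents, then replace every run of non-alphanumerics with a hyphen.
        ascii_text = (
            unicodedata.normalize("NFKD", part)
            .encode("ascii", "ignore")
            .decode("ascii")
        )
        slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")
        if slug:
            slug_parts.append(slug)

    return "-".join(slug_parts) + "." + extension.lower().lstrip(".")


print(descriptive_filename("Acme", "Kettle 2", "Front View", "JPG", version=3))
# prints: acme-kettle-2-front-view-v3.jpg
```

Generating names from one function keeps every asset on the same pattern, which is easier to maintain than renaming files by hand.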

Step 3: Ensure Visual Identity Consistency

AI engines detect inconsistencies as trust gaps.

Maintain consistent:

- color palettes
- logo placement
- typography
- screenshot style
- product angles

Consistency is a ranking signal.

Step 4: Produce Multi-Modal Content Hubs

Examples:

- video explainers
- image-rich tutorials
- screenshot-based guides
- visual workflows
- annotated product breakdowns

These become “multi-modal citations.”

Step 5: Optimize Your On-Site Media Delivery

AI engines need:

- clean URLs
- alt text
- EXIF metadata
- JSON-LD for media
- accessible versions
- fast CDN delivery

Poor media delivery = poor multi-modal visibility.
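
A basic audit of one item on this list, alt text, can be run with Python's standard `html.parser` module. This is a minimal sketch: the `ImageAltAudit` class, the `find_images_missing_alt` helper, and the sample markup are hypothetical, and the check flags empty `alt` attributes even though an empty value is legitimate for purely decorative images.

```python
from html.parser import HTMLParser


class ImageAltAudit(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src") or "(no src)")


def find_images_missing_alt(html):
    audit = ImageAltAudit()
    audit.feed(html)
    audit.close()
    return audit.missing_alt


page = """
<img src="/img/kettle-front.jpg" alt="Acme Kettle 2, front view">
<img src="/img/kettle-side.jpg">
<img src="/img/banner.png" alt="">
"""
print(find_images_missing_alt(page))
# prints: ['/img/kettle-side.jpg', '/img/banner.png']
```

Running a check like this across templates before publishing catches missing descriptions while they are still cheap to fix.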

Step 6: Maintain Visual Provenance (C2PA)

Embed provenance into:

- product photos
- videos
- PDF guides
- infographics

This helps engines verify you as the source.

Step 7: Test Multi-Modal Prompts Weekly

Search with:

- screenshots
- product photos
- charts
- video clips

Monitor:

- misclassification
- missing citations
- incorrect entity linking

Generative misinterpretation must be corrected early.
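
The weekly test results can be recorded by hand and then tallied so that regressions stand out. The sketch below assumes one reasonable reading of the three monitoring categories: a wrong category counts as misclassification, a right category with the wrong brand or product counts as incorrect entity linking, and an uncited answer counts as a missing citation. The `PromptTest` record, its fields, and the sample rows are hypothetical, and no engine API is called.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class PromptTest:
    asset: str              # file uploaded as the query
    expected_category: str  # what kind of thing it should be recognized as
    returned_category: str  # what kind of thing the engine said it was
    expected_entity: str    # brand or product the engine should name
    returned_entity: str    # brand or product the engine actually named
    cited: bool             # whether the brand's own site was cited


def issues(test):
    """Return the monitoring categories that apply to one manual test."""
    found = []
    if test.returned_category != test.expected_category:
        found.append("misclassification")
    elif test.returned_entity != test.expected_entity:
        found.append("incorrect entity linking")
    if not test.cited:
        found.append("missing citation")
    return found


def weekly_summary(tests):
    """Count how often each issue appeared across the week's tests."""
    return Counter(issue for t in tests for issue in issues(t))


week = [
    PromptTest("kettle-front.jpg", "kettle", "kettle", "Acme Kettle 2", "Acme Kettle 2", True),
    PromptTest("kettle-side.jpg", "kettle", "teapot", "Acme Kettle 2", "", False),
    PromptTest("dashboard.png", "dashboard", "dashboard", "Acme Analytics", "Other Analytics", False),
]
print(weekly_summary(week))
```

Comparing these counts week over week shows whether changes to assets and metadata are reducing misinterpretation.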

Part 7: Predicting the Next Stage of Multi-Modal GEO (2026–2030)

Here are the future shifts.

Prediction 1: Visual citations become as important as text citations

Engines will show:

- image source badges
- video excerpt credits
- screenshot provenance tags

Prediction 2: AI will prefer brands with visual-first documentation

Step-by-step screenshots will outperform text-only tutorials.

Prediction 3: Search will operate like a personal visual assistant

Users will point their camera at something → AI handles the workflow.

Prediction 4: Multi-modal alt data will become standardized

New schema standards for:

- diagrams
- screenshots
- annotated UI flows

Prediction 5: Brands will maintain “visual knowledge graphs”

Structured relationships between:

- icons
- screenshots
- product photos
- diagrams

Prediction 6: AI assistants will choose which visuals to trust

Engines will weigh:

- provenance
- clarity
- consistency
- authority
- metadata alignment

Prediction 7: Multi-modal GEO teams emerge

Enterprises will hire:

- visual documentation strategists
- multi-modal metadata engineers
- AI comprehension testers

GEO becomes multi-disciplinary.

Part 8: The Multi-Modal GEO Checklist (Copy & Paste)

Media Assets

- Canonical product images
- Canonical UI screenshots
- Video demos
- Visual diagrams
- Annotated workflows

Metadata

- Alt text
- Structured captions
- EXIF/metadata
- JSON-LD for media
- C2PA provenance

Identity

- Consistent visual branding
- Uniform logo placement
- Standard screenshot style
- Multi-modal entity linking

Content

- Video-rich tutorials
- Screenshot-based guides
- Visual-first product documentation
- Charts with clear labels

Monitoring

- Weekly screenshot queries
- Weekly image queries
- Weekly video queries
- Entity misclassification checks

This ensures full multi-modal readiness.

Conclusion: Multi-Modal Search Is the Next Frontier of GEO

Generative search is no longer text-driven. AI engines now:

- see
- understand
- compare
- analyze
- reason
- summarize

across all media formats. Brands that optimize only for text will lose visibility as multi-modal behavior becomes standard across both consumer and enterprise search interfaces.

The future belongs to brands that treat images, video, screenshots, diagrams, and voice as primary sources of truth — not supplementary assets.

Multi-modal GEO is not a trend. It is the next foundation of digital visibility.