Intro

Most marketers think of AI optimization in terms of proprietary systems like ChatGPT, Gemini, or Claude. But the real disruption is happening in the open-source LLM ecosystem, led by Meta’s LLaMA models.

LLaMA powers:

- enterprise chatbots
- on-device assistants
- search systems
- customer service agents
- RAG-powered tools
- internal enterprise knowledge engines
- SaaS product copilots
- multi-agent work automation
- open-source recommender systems

Unlike closed models, LLaMA is everywhere — inside thousands of companies, startups, apps, and workflows.

If your brand isn’t represented in LLaMA-based models, you’re losing visibility across the entire open-source AI landscape.

This article explains how to optimize your content, data, and brand so LLaMA models can understand, retrieve, cite, and recommend you, and how to capitalize on the open-source advantage.

1. Why LLaMA Optimization Matters

Meta’s LLaMA models represent:

- ✔ one of the most widely deployed open-weight LLM families
- ✔ the backbone of enterprise AI infrastructure
- ✔ the foundation of a large share of open-source AI projects
- ✔ the core of local and on-device AI applications
- ✔ the model that startups fine-tune for vertical use cases

LLaMA is the Linux of AI: lightweight, modular, remixable, and ubiquitous.

This means your brand can appear in:

- enterprise intranets
- internal search systems
- company-wide knowledge tools
- AI customer assistants
- product recommendation bots
- private RAG databases
- local offline AI agents
- industry-specific fine-tuned models

Closed models influence consumers.

LLaMA influences business ecosystems.

Ignoring it would be a catastrophic mistake for brands in 2025 and beyond.

2. How LLaMA Models Learn, Retrieve, and Generate

Unlike proprietary LLMs, LLaMA models are:

- ✔ often fine-tuned by third parties
- ✔ trained on custom datasets
- ✔ integrated with local retrieval systems
- ✔ modified through LoRA adapters
- ✔ heavily augmented with external context

This creates three important optimization realities:

1. LLaMA Models Vary Widely

No two companies run the same LLaMA.

Some run LLaMA 3 8B with RAG. Some run LLaMA 2 70B fine-tuned for finance. Some run tiny on-device 3B models.

Optimization must target universal signals, not model-specific quirks.

2. RAG (Retrieval-Augmented Generation) Dominates

80% of LLaMA deployments use RAG pipelines.

This means:

Your content must be RAG-friendly: short, factual, structured, neutral, and extractable.
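To make "RAG-friendly" concrete, here is a minimal Python sketch of the retrieve-then-prompt step. It assumes simple keyword overlap as a stand-in for the embedding search a real pipeline would use, and the brand name "Acme Rank" and its page chunks are hypothetical. The point it illustrates: only the retrieved chunks reach the model, so a page that is never retrieved never shapes the answer.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks sharing the most words with the query (keyword overlap, not vectors)."""
    query_words = tokenize(query)
    # sorted() stays stable with reverse=True, so ties keep their original order.
    ranked = sorted(chunks, key=lambda c: len(query_words & tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Assemble the augmented prompt: only the retrieved chunks reach the model."""
    retrieved = retrieve(query, chunks, k)
    context = "\n\n".join(f"[{i + 1}] {chunk}" for i, chunk in enumerate(retrieved))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical page chunks for a hypothetical brand.
chunks = [
    "Acme Rank is a rank tracking tool for SEO teams.",
    "Our founders met at a conference and bonded over coffee.",
    "Acme Rank updates keyword positions daily.",
]
print(build_prompt("What is Acme Rank?", chunks))
```

The storytelling chunk shares no words with the question, so it never makes it into the prompt. A vector search would score it low for similar reasons.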

3. Enterprise Context > Open Web

Companies often override default model behavior with:

- internal documents
- custom knowledge bases
- private datasets
- policy constraints

Your public-facing content must earn enough trust that LLaMA fine-tuners and RAG engineers choose to include your data in their systems.

3. The 5 Pillars of LLaMA Optimization (LLO)

Optimizing for LLaMA requires a different approach than optimizing for ChatGPT or Gemini.

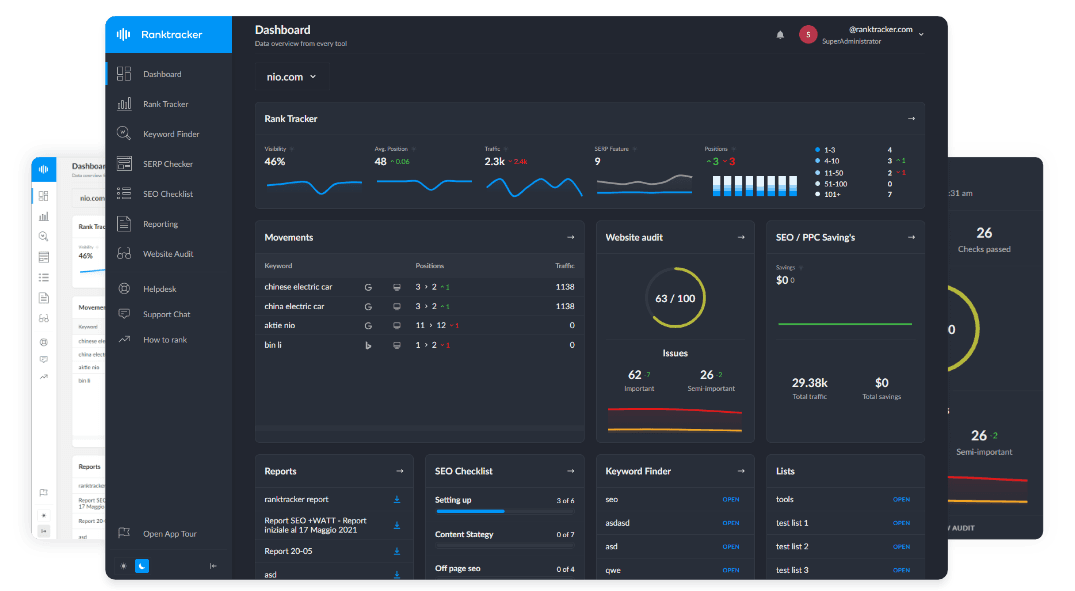

The All-in-One Platform for Effective SEO

Behind every successful business is a strong SEO campaign. But with countless optimization tools and techniques out there to choose from, it can be hard to know where to start. Well, fear no more, cause I've got just the thing to help. Presenting the Ranktracker all-in-one platform for effective SEO

We have finally opened registration to Ranktracker absolutely free!


Here are the five pillars:

1. RAG-Ready Content

In deployed systems, LLaMA often answers from retrieved text more than from its pretraining data.

2. Machine-Friendly Formatting

Markdown-style clarity beats dense, stylistic prose.

3. High-Fidelity Facts

Fine-tuners and enterprise users demand trustworthy data.

4. Open-Web Authority & Semantic Stability

LLaMA models absorb the consensus of the open web they were trained on.

5. Embedding-Friendly Information Blocks

Your content must be distinct enough in vector space for retrieval to tell your brand apart.

Let’s break these down.

4. Pillar 1 — Create RAG-Ready Content

This is the single most important element of LLaMA optimization.

RAG systems prefer:

- ✔ short paragraphs
- ✔ clear definitions
- ✔ numbered lists
- ✔ bullet points
- ✔ explicit terminology
- ✔ table-like comparisons
- ✔ question-and-answer sequences
- ✔ neutral, factual tone

RAG engineers want your content because it’s:

clean → extractable → trustworthy → easy to embed

If your content is hard for RAG to interpret, your brand won’t be included in corporate AI systems.
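RAG pipelines usually split pages into chunks before embedding them. The sketch below is a simplified paragraph-packing chunker. It assumes blank lines separate paragraphs, and it counts words rather than model tokens (production splitters typically use the model's tokenizer). It shows why short, self-contained paragraphs survive intact, while an overlong paragraph gets cut at arbitrary word boundaries.

```python
def chunk_text(text: str, max_words: int = 80) -> list[str]:
    """Pack whole paragraphs into chunks of at most max_words words.

    Paragraphs are assumed to be separated by blank lines. A paragraph longer
    than max_words is sliced into fixed-size word windows, ignoring meaning.
    """
    chunks: list[str] = []
    current: list[str] = []
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        words = para.split()
        if len(words) > max_words:
            # Close the open chunk, then cut the oversized paragraph mid-thought.
            if current:
                chunks.append(" ".join(current))
                current = []
            for i in range(0, len(words), max_words):
                chunks.append(" ".join(words[i:i + max_words]))
            continue
        if len(current) + len(words) > max_words:
            # The next paragraph would overflow, so start a new chunk.
            chunks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks
```

Raising or lowering `max_words` trades context per chunk against retrieval precision. The 80-word default here is an arbitrary illustration, not a recommended value.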

5. Pillar 2 — Optimize for Machine Interpretability

Write for:

- token efficiency
- embedding clarity
- semantic separation
- answer-first structure
- topical modularity

Recommended formats:

- ✔ “What is…” definitions
- ✔ “How it works…” explanations
- ✔ decision trees
- ✔ use-case workflows
- ✔ feature breakdowns
- ✔ comparison blocks

Use Ranktracker’s AI Article Writer to produce answer-first structures ideal for LLaMA ingestion.

6. Pillar 3 — Strengthen Factual Integrity

Enterprises choose content for fine-tuning based on:

- factuality
- consistency
- accuracy
- recency
- neutrality
- domain authority
- safety

Your content must include:

- ✔ citations
- ✔ transparent definitions
- ✔ update logs
- ✔ versioning
- ✔ explicit disclaimers
- ✔ expert authors
- ✔ methodology notes (for data or research)

If your content lacks these trust signals, LLaMA-based systems are unlikely to use it.

7. Pillar 4 — Build Open-Web Authority & Entity Strength

The original LLaMA was trained on large slices of public data, including:

- Wikipedia
- Common Crawl
- GitHub
- Stack Exchange
- arXiv
- open-domain web content

To appear in the model’s internal knowledge, you need:

- ✔ consistent entity definitions
- ✔ strong backlink authority
- ✔ citations in authoritative publications
- ✔ mentions in reputable directories
- ✔ participation in open-source communities
- ✔ public technical documentation

Use:

- Backlink Checker (build authority)
- Backlink Monitor (track citations)
- SERP Checker (spot entity alignment)
- Web Audit (fix ambiguity issues)

LLaMA’s open-source nature rewards open-web consensus.
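One rough way to audit "consistent entity definitions" is to compare how different sources describe your brand. This sketch scores word overlap (Jaccard similarity) between every pair of descriptions and flags pairs that fall below a threshold. The source names, descriptions, and 0.5 threshold are illustrative assumptions, and word overlap is only a crude proxy for semantic agreement.

```python
import re
from itertools import combinations


def words(text: str) -> set[str]:
    """Lowercased word set used for a rough overlap comparison."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Shared words divided by total distinct words (1.0 means identical vocabulary)."""
    wa, wb = words(a), words(b)
    if not wa and not wb:
        return 1.0
    return len(wa & wb) / len(wa | wb)


def consistency_report(descriptions: dict[str, str], threshold: float = 0.5) -> list[tuple[str, str, float]]:
    """Flag every pair of sources whose brand descriptions overlap less than `threshold`."""
    flagged = []
    for (src_a, text_a), (src_b, text_b) in combinations(descriptions.items(), 2):
        score = jaccard(text_a, text_b)
        if score < threshold:
            flagged.append((src_a, src_b, round(score, 2)))
    return flagged


descriptions = {  # hypothetical sources and wording
    "website": "Acme Rank is a rank tracking tool for SEO teams",
    "directory": "Acme Rank is a rank tracking tool for SEO teams",
    "old_press_release": "Acme makes coffee machines",
}
for pair in consistency_report(descriptions):
    print(pair)
```

Each flagged pair points to a source worth updating so the open web describes the brand the same way everywhere.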

8. Pillar 5 — Make Your Content Embedding-Friendly

Since LLaMA deployments rely heavily on embeddings, ensure your content works well in vector space.

Embedding-friendly pages include:

- ✔ clear topical boundaries
- ✔ unambiguous terminology
- ✔ minimal fluff
- ✔ explicit feature lists
- ✔ tightly scoped paragraphs
- ✔ predictable structure

Embedding-unfriendly pages mix:


❌ multiple topics

❌ vague metaphors

❌ dense storytelling

❌ excessive fluff

❌ unclear feature descriptions
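Vector retrieval ranks chunks by their similarity to the query. The sketch below uses bag-of-words counts and cosine similarity as a stand-in for a neural embedding model. That substitution is an assumption: real embeddings capture meaning rather than just shared words. It still illustrates the intuition behind the list above, namely that off-topic sentences dilute a chunk's vector and pull it away from focused queries. The brand and texts are hypothetical.

```python
import math
import re
from collections import Counter


def bow(text: str) -> Counter:
    """Bag-of-words vector: lowercased word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


query = "rank tracking tool keyword positions"
focused = "Acme Rank is a rank tracking tool. It tracks keyword positions daily."
mixed = (
    "Acme Rank is a rank tracking tool. Our founders love coffee, "
    "we sponsor a cycling team, and our office dog is named Pixel."
)

print("focused:", round(cosine(bow(query), bow(focused)), 3))
print("mixed:  ", round(cosine(bow(query), bow(mixed)), 3))
```

Both texts open with the same product sentence, but the mixed one scores noticeably lower because its extra topics inflate the vector without matching the query.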

9. How Brands Can Leverage Open-Source LLaMA

LLaMA gives marketers five opportunities that proprietary LLMs don’t.

Opportunity 1 — Your Content Can Be Included in Fine-Tuned Models

If you publish clean documentation, companies may embed or fine-tune your content into:

- customer support bots
- internal knowledge engines
- procurement tools
- enterprise search layers

This means your brand can become part of the infrastructure of thousands of businesses.

Opportunity 2 — You Can Build Your Own Brand Model

With LLaMA, any brand can fine-tune or build:

- ✔ an internal LLM
- ✔ a branded assistant
- ✔ a domain-specific chatbot
- ✔ a marketing or SEO copilot
- ✔ an interactive helpdesk

Your content becomes the engine.
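A branded assistant usually starts from a training set drawn from your own content. As a hedged sketch, this converts FAQ question-and-answer pairs into chat-style JSONL, with one conversation per line. The `messages`/`role`/`content` layout is an assumption: it is a common convention, but each fine-tuning toolkit documents its own expected fields, so check yours before training. The FAQ content is hypothetical.

```python
import json


def faq_to_jsonl(faq: list[tuple[str, str]], system_prompt: str) -> str:
    """Turn (question, answer) pairs into chat-style JSONL, one conversation per line.

    The "messages"/"role"/"content" layout is a common convention, not a
    universal standard; adapt it to the format your toolkit expects.
    """
    lines = []
    for question, answer in faq:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


# Hypothetical FAQ content for a hypothetical brand.
faq = [
    ("What is Acme Rank?", "Acme Rank is a rank tracking tool for SEO teams."),
    ("How often does Acme Rank update positions?", "Keyword positions update daily."),
]
jsonl = faq_to_jsonl(faq, "You are the Acme Rank helpdesk assistant.")
print(jsonl)
```

Clean, answer-first FAQ pages convert almost directly into records like these, which is one practical reason the structure advice above pays off.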

Opportunity 3 — You Can Influence Vertical AI Models

Startups are fine-tuning LLaMA for:

- law
- finance
- healthcare
- marketing
- cybersecurity
- ecommerce
- project management
- SaaS tools

Strong public documentation → greater inclusion.

Opportunity 4 — You Can Be Integrated Into RAG Plugins

Developers scrape the following for vector stores:

- docs
- API references
- tutorials
- guides
- product pages

If your content is clear, developers are more likely to pick your brand for inclusion.
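Before content reaches a vector store, it is typically scraped and reduced to plain text. This sketch uses Python's standard-library `html.parser` to keep headings (h1 to h3), paragraphs, and list items while skipping scripts, styles, navigation, and footers. Which tags to keep or skip is an assumption, not a fixed rule, and the sample page is hypothetical.

```python
from html.parser import HTMLParser


class DocTextExtractor(HTMLParser):
    """Collect text from headings, paragraphs, and list items; skip scripts, styles, nav, footers."""

    KEEP = {"h1", "h2", "h3", "p", "li"}
    SKIP = {"script", "style", "nav", "footer"}

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[str] = []
        self._skip_depth = 0  # greater than 0 while inside a skipped element
        self._buf = None      # text pieces of the block being read, or None

    def flush_block(self) -> None:
        """Emit the current block with whitespace collapsed, if it has any text."""
        if self._buf is not None:
            text = " ".join("".join(self._buf).split())
            if text:
                self.blocks.append(text)
        self._buf = None

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag in self.KEEP and self._skip_depth == 0:
            self.flush_block()
            self._buf = []

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1
        elif tag in self.KEEP:
            self.flush_block()

    def handle_data(self, data):
        if self._skip_depth == 0 and self._buf is not None:
            self._buf.append(data)


def extract_blocks(html: str) -> list[str]:
    """Return the kept text blocks of an HTML page, in document order."""
    parser = DocTextExtractor()
    parser.feed(html)
    parser.close()
    parser.flush_block()
    return parser.blocks


html = """<html><head><style>p{color:red}</style></head><body>
<nav><p>Home | Pricing</p></nav>
<h1>Acme Rank</h1>
<p>Acme Rank is a <b>rank tracking</b> tool.</p>
<ul><li>Daily updates</li></ul>
<script>var x = 1;</script>
</body></html>"""
print(extract_blocks(html))
```

Facts that live in clean heading, paragraph, and list markup come through intact. Text rendered by scripts or buried in navigation is dropped by a scraper like this.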

Opportunity 5 — You Can Build Community Equity

LLaMA has a massive GitHub ecosystem.

Participating in:

- issues
- documentation
- tutorials
- open datasets
- model adapters
- fine-tuning recipes

This kind of participation positions your brand as a leader in the open-source AI community.

10. How to Measure LLaMA Visibility

Track these six KPIs:

1. RAG Inclusion Frequency

How often your content appears in vector stores.

2. Fine-Tuning Adoption Signals

Mentions in model cards or community forks.

3. Developer Mentions

Your brand referenced in GitHub repos or npm/PyPI packages.

4. Model Recall Testing

Ask local LLaMA instances:

- “What is [brand]?”
- “Best tools for [topic]?”
- “Alternatives to [competitor]?”
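These recall prompts can be scripted. This sketch assumes a local Ollama server on its default port (11434) serving a model tagged `llama3`; swap in whatever runtime and model you actually use. The `generate` parameter lets you plug in a different backend. A brand mention in the "What is [brand]?" answer proves little on its own, since the name is already in the question, so read those answers manually. The brand, topic, and competitor names are hypothetical.

```python
import json
import urllib.request
from typing import Callable

PROMPTS = [
    "What is {brand}?",
    "Best tools for {topic}?",
    "Alternatives to {competitor}?",
]


def ollama_generate(prompt: str, model: str = "llama3") -> str:
    """Send one non-streaming request to a local Ollama server (assumed at localhost:11434)."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        "http://localhost:11434/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]


def recall_test(brand: str, topic: str, competitor: str,
                generate: Callable[[str], str] = ollama_generate) -> dict[str, bool]:
    """Map each prompt to whether the brand name appears in the model's answer."""
    results = {}
    for template in PROMPTS:
        prompt = template.format(brand=brand, topic=topic, competitor=competitor)
        answer = generate(prompt)
        results[prompt] = brand.lower() in answer.lower()
    return results


# Requires a running server; names are hypothetical:
# print(recall_test("Acme Rank", "rank tracking", "CompetitorX"))
```

Running the same prompts on a schedule, across several local models, turns one-off spot checks into a trend you can track.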

5. Embedding Quality Score

How reliably embedding search retrieves your content for relevant queries.
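One way to put a number on this KPI is recall@k: the share of test queries for which your page lands in the top k results of an embedding search. This sketch assumes you have already run each query through your own vector index and recorded the ranked URLs. The queries and URLs are hypothetical.

```python
def recall_at_k(rankings: dict[str, list[str]], targets: dict[str, str], k: int = 5) -> float:
    """Share of test queries whose target URL appears in the top-k retrieved results.

    rankings: query -> URLs in the order your vector search returned them
    targets:  query -> the URL you expect to be retrieved for that query
    """
    if not targets:
        return 0.0
    hits = sum(1 for query, url in targets.items() if url in rankings.get(query, [])[:k])
    return hits / len(targets)


rankings = {  # hypothetical search results
    "best rank tracker": ["https://example.com/acme", "https://example.com/other"],
    "what is acme rank": ["https://example.com/blog", "https://example.com/other", "https://example.com/acme"],
}
targets = {
    "best rank tracker": "https://example.com/acme",
    "what is acme rank": "https://example.com/acme",
}
print(recall_at_k(rankings, targets, k=2))  # prints 0.5
```

A small, fixed set of test queries, re-run after each content update, shows whether restructuring a page actually moved it up in retrieval.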

6. Open-Web Entity Strength

How consistently search results describe your brand as the same entity.


Together, these form the LLaMA Visibility Score (LVS).
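No formula is given for combining the six KPIs into the LVS, so treat this as one hypothetical formulation. It assumes each KPI has already been normalized to a 0 to 1 scale, then takes a weighted average scaled to 0 to 100, using equal weights unless you supply your own. The KPI keys are illustrative names, not a standard.

```python
from typing import Optional

# Hypothetical keys for the six KPIs above.
KPI_NAMES = [
    "rag_inclusion",
    "fine_tuning_adoption",
    "developer_mentions",
    "model_recall",
    "embedding_quality",
    "entity_strength",
]


def llama_visibility_score(kpis: dict[str, float],
                           weights: Optional[dict[str, float]] = None) -> float:
    """Weighted average of six KPIs (each pre-normalized to 0..1), scaled to 0..100."""
    weights = weights or {name: 1.0 for name in KPI_NAMES}  # equal weights: an assumption
    for name in KPI_NAMES:
        if not 0.0 <= kpis[name] <= 1.0:
            raise ValueError(f"{name} must be normalized to 0..1, got {kpis[name]}")
    total = sum(weights[name] for name in KPI_NAMES)
    return 100 * sum(weights[name] * kpis[name] for name in KPI_NAMES) / total
```

Keeping the weights explicit makes it easy to emphasize the KPIs that matter most for your market, such as model recall for consumer brands or RAG inclusion for B2B documentation.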

11. How Ranktracker Tools Support LLaMA Optimization

Ranktracker helps you become “RAG-friendly” and “open-source ready.”

Web Audit

Ensures machine readability and clarity.

Keyword Finder

Builds clusters that power embedding separability.

AI Article Writer

Creates answer-first content ideal for LLaMA retrieval.

Backlink Checker

Strengthens authority signals LLaMA trusts.

Backlink Monitor

Logs external citations used by developers.

SERP Checker

Shows entity alignment needed for model inclusion.

Final Thought:

LLaMA Is Not Just an LLM — It’s the Foundation of AI Infrastructure

Optimizing for LLaMA is optimizing for:

- enterprise AI
- developer ecosystems
- open-source knowledge systems
- RAG pipelines
- startup copilots
- future multimodal assistants
- on-device intelligence

If your content is:

- structured
- factual
- extractable
- consistent
- authoritative
- embedding-friendly
- RAG-optimized
- open-web aligned

Then your brand becomes a default component in thousands of AI systems — not just a website waiting for a click.

LLaMA offers a unique opportunity:

You can become part of the global open-source AI infrastructure — if you optimize for it now.