Intro

Have you ever wondered why some websites show up higher in Google search results than others? It often comes down to how well these sites talk to Google through something called website crawling. This might sound complex, but stick with us. We’ll explain it all in simple terms, helping your site get the attention it deserves from Google.

One key fact is that Google has been making its crawling technology better. This means it can now understand and index websites more efficiently than ever before, and your website can benefit if it follows crawl-friendly practices. This post will guide you through what this means for your technical SEO strategies. By following a few best practices, you can make sure your website stands out online.

Ready to boost your site’s rank? Keep reading!

Importance of understanding website crawling

Understanding website crawling is vital for any digital marketing strategy, especially SEO. Google uses sophisticated algorithms to crawl web pages, determining which pages get indexed and how they rank in search results.

This process directly influences organic performance and website ranking. Knowing how Google's crawling technology works allows for better optimisation of web content and technical aspects of a site.

It ensures that the content not only meets quality standards but also adheres to the principles that enhance crawlability and indexing.

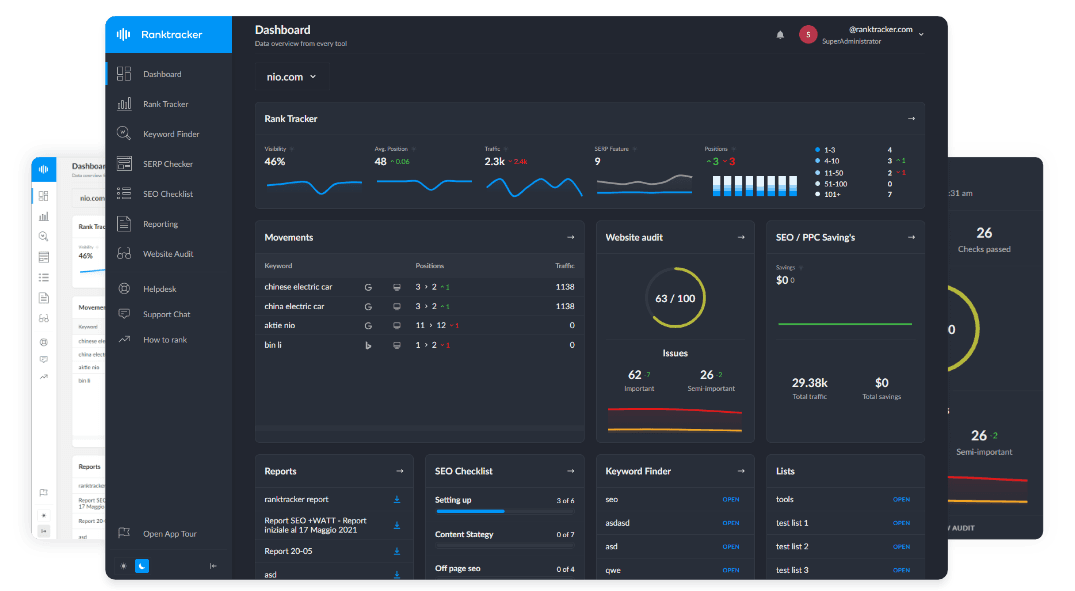

Google constantly refines its crawling algorithm, making it essential for website owners to stay updated with the latest advancements. As Google's new algorithm shapes how search engines crawl and index content, understanding these changes allows marketers to devise more effective technical SEO strategies.

This knowledge helps in identifying potential areas for improvement within a website's structure or content strategy, leading towards an enhanced user experience and higher search engine rankings.

With that context in place, we can look at what website crawling actually involves.

Understanding Website Crawling

Website crawling occurs when search engines explore the internet to gather information. Google’s algorithms determine how efficiently this process happens, affecting how well your site ranks in search results.

What is website crawling?

Website crawling refers to the process by which search engines like Google discover new and updated content on various websites. Crawlers, also known as spiders or bots, systematically browse the web.

They follow links from one page to another, collecting data along the way.
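The link-following behaviour described above can be sketched in a few lines of Python. This is only an illustration, not how Googlebot is implemented: the pages live in an in-memory dictionary called `SITE`, the `fetch` argument is a hypothetical callable standing in for a real HTTP request, and the `example.com` URLs are made up.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch):
    """Breadth-first crawl that stays on the start URL's host.

    `fetch` is a hypothetical callable returning the HTML for a URL,
    or None when the page cannot be retrieved.
    """
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            target = urljoin(url, href)  # resolve relative links
            if urlparse(target).netloc == host and target not in seen:
                seen.add(target)
                queue.append(target)
    return visited


# Toy site held in memory (hypothetical pages, no network access).
SITE = {
    "https://example.com/": '<a href="/blog">Blog</a> <a href="/about">About</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post</a> <a href="https://other.example/">Ext</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
    "https://example.com/orphan": "<p>Nothing links to this page.</p>",
}

crawled = crawl("https://example.com/", SITE.get)
print(crawled)
```

Note that `/orphan` never appears in the result: no page links to it, so a crawler that relies on links alone has no way to find it.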

Google's crawling algorithm plays a crucial role in how a site performs in search results: a page that is never crawled cannot be indexed, and a page that is not indexed cannot rank. How easily a site is crawled and indexed depends on factors such as website optimisation and a crawl-friendly structure.

A well-optimised site ensures that crawlers can access its content easily. This helps search engines interpret the content correctly, which in turn supports better search rankings for businesses aiming for online visibility.

Google's crawling algorithm

Google's crawling algorithm plays a crucial role in website optimisation. It identifies which pages to index and how frequently to revisit them. This algorithm examines many factors, such as content relevance and site structure.

Search engines use it to determine the importance of each page. A well-optimised site encourages Google's bots to crawl effectively, and understanding SEO best practices can help improve your site's visibility in search results.

Understanding these mechanics helps improve technical SEO strategies. Websites that follow best practices are more likely to rank higher in search results. For instance, when a server responds quickly, crawlers can fetch more pages in the same amount of time.

Therefore, enhancing website performance directly influences web indexing success and overall visibility online.

Impact on Technical SEO

A crawl-friendly website improves visibility in search engines. Various factors influence how well Google crawls and indexes your site, making proactive adjustments essential for success.

Importance of having a crawl-friendly website

A crawl-friendly website plays a crucial role in search engine optimisation. Google's enhanced crawling technology relies on easily accessible content. If a website is difficult to navigate, crawlers struggle to index it properly.

This results in lower rankings and decreased visibility.

Optimising URL structure significantly improves crawling efficiency. Proper HTTP status codes also guide crawlers effectively. Internal linking directs both users and crawlers through the site seamlessly.

By implementing structured data and optimising for Core Web Vitals, webmasters enhance their technical SEO strategies further, making it easier for Google to index their pages smoothly.

Factors that affect crawling and indexing

Crawling and indexing rely on several factors. First, a site's structure plays a crucial role. Search engines prefer clear, logical layouts that help them find all pages easily. Websites with deeply nested links or complex navigation can hinder the crawling process. This applies just as much to sites serving niche audiences, where a clear structure improves both visibility and usability.

Page load speed significantly impacts how quickly search engines index content. Slow-loading pages frustrate users and bots alike, leading to missed opportunities for proper indexing. Furthermore, using accurate HTTP status codes is vital. A 404 error means the page does not exist and can prevent indexing altogether.

Content quality also matters in technical SEO strategies. Unique, informative content attracts more visitors and encourages better crawling rates. Internal linking strengthens connections between relevant pages, making it easier for Google's crawlers to discover more of your site's content efficiently.

Mobile friendliness is essential today as well. Google prioritises sites that perform well on mobile devices due to increasing user reliance on smartphones. HTTPS usage signals security to both users and search engines, which may boost trustworthiness during the crawling process too.

Best Practices for Technical SEO

Best practices for technical SEO focus on specific actions. Implement proper HTTP status codes and optimise your URL structure to improve visibility.

Proper HTTP status codes

Proper HTTP status codes play a crucial role in technical SEO. These codes communicate how a server responds to requests from web crawlers. A 200 status code indicates that the page loads correctly, while a 404 shows that the page does not exist.

Search engines rely on these signals for efficient crawling and indexing.

Using correct status codes helps improve website optimisation. Errors can hinder Google's crawling technology and affect your site's visibility in search results. Implementing proper HTTP responses sets the foundation for enhancing your overall technical SEO strategy.
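To make the idea concrete, here is a rough sketch of how a site audit script might group status codes by what they signal to a crawler. The function name `crawl_signal` and its labels are assumptions made for illustration. The groupings follow the standard HTTP status classes and simplify how search engines actually treat each code.

```python
def crawl_signal(status: int) -> str:
    """Return a coarse label for what a status code tells a crawler."""
    if 200 <= status < 300:
        return "ok"                  # page loaded, content can be processed
    if status in (301, 308):
        return "permanent redirect"  # the page has moved for good
    if status in (302, 303, 307):
        return "temporary redirect"  # the page has moved for now
    if status in (404, 410):
        return "missing"             # the page does not exist
    if 400 <= status < 500:
        return "client error"        # the request was refused or invalid
    if 500 <= status < 600:
        return "server error"        # the server failed to respond properly
    return "other"


for code in (200, 301, 404, 503):
    print(code, crawl_signal(code))
```

A check like this is most useful when run over every URL in a sitemap, so that unexpected "missing" or "server error" pages surface before a crawler finds them.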

Next, we will explore optimising URL structure for better performance.

Optimising URL structure

Proper HTTP status codes set the stage for effective website optimisation. Optimising URL structure plays a crucial role in technical SEO strategies. Clean, descriptive URLs help search engines understand your content better.

They also improve user experience by making links easier to read and remember.

Use relevant keywords within your URLs. Search engines appreciate concise and meaningful structures that reflect page content accurately. Avoid complex parameters or excessive characters in URLs.

A well-structured URL can lead to significant improvements in crawling and indexing efficiency, enhancing your site's visibility on search engine result pages.
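As a minimal sketch, the checks below flag a few of the URL problems mentioned above. The function `url_issues` and the limits `MAX_LENGTH` and `MAX_PARAMS` are assumptions chosen for illustration, not official thresholds. The preference for hyphens over underscores reflects Google's published URL guidance.

```python
from urllib.parse import parse_qs, urlparse

MAX_LENGTH = 100  # assumed limit, not an official figure
MAX_PARAMS = 2    # assumed limit, not an official figure


def url_issues(url: str) -> list[str]:
    """Return a list of readability problems found in a URL."""
    parts = urlparse(url)
    issues = []
    if len(url) > MAX_LENGTH:
        issues.append("URL is very long")
    if len(parse_qs(parts.query, keep_blank_values=True)) > MAX_PARAMS:
        issues.append("too many query parameters")
    if parts.path != parts.path.lower():
        issues.append("uppercase characters in path")
    if "_" in parts.path:
        issues.append("underscores used instead of hyphens")
    return issues


print(url_issues("https://example.com/blog/technical-seo-basics"))
print(url_issues("https://example.com/Blog/post_1?id=7&ref=a&session=xyz"))
```

The first URL passes every check, while the second is flagged for its parameters, capitalisation, and underscore.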

Utilising internal linking

Internal linking boosts website optimisation. It connects various pages within your site, guiding both users and search engines. This technique helps Google’s indexing technology understand your content better.

Well-structured internal links enhance the flow of traffic and improve user experience. They allow search engine crawlers to discover new content easily. Each link you use can influence how well a page ranks in search results.

Building a strong network of internal links supports technical SEO improvements. Focus on relevant anchor text that reflects the linked page's subject matter for optimal results. Ensure that each link adds value to the reader's journey without overwhelming them with choices.
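One way to audit internal linking is to count how many pages link to each page and flag those with no inbound links at all. The sketch below assumes you already have a link graph as a dictionary mapping each page to the pages it links to. The graph, the paths, and the function names are hypothetical.

```python
def inbound_counts(link_graph: dict[str, list[str]]) -> dict[str, int]:
    """Count how many distinct pages link to each page in the graph."""
    counts = {page: 0 for page in link_graph}
    for source, targets in link_graph.items():
        for target in set(targets):  # count each source page once
            if target != source and target in counts:
                counts[target] += 1
    return counts


def orphan_pages(link_graph: dict[str, list[str]], home: str = "/") -> list[str]:
    """Pages other than the home page that no other page links to."""
    counts = inbound_counts(link_graph)
    return sorted(p for p, n in counts.items() if n == 0 and p != home)


# Hypothetical link graph: page -> pages it links to.
graph = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/"],
    "/about": ["/"],
    "/blog/post-1": ["/blog"],
    "/old-landing": ["/"],
}

print(inbound_counts(graph))
print(orphan_pages(graph))
```

Here `/old-landing` links out but receives no links, so crawlers that follow internal links would not reach it.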

Used effectively, this strategy helps shape a website structure that Google can crawl and index efficiently. Next, consider implementing structured data and optimising for Core Web Vitals to further improve your site's visibility in searches.

Implementing structured data and Core Web Vitals

Structured data helps search engines understand your content better. You can use it to highlight important information on your website. This practice supports Google's indexing and can improve visibility in search results. With advancements in AI, structured data is becoming even more powerful, enabling search engines to interpret content more contextually.
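Structured data is commonly added as a JSON-LD snippet using the schema.org vocabulary. The sketch below builds one for an article. The helper name `article_json_ld` and the sample values are assumptions, and which properties a search engine actually uses depends on the content type, so treat the field list as a starting point.

```python
import json


def article_json_ld(headline: str, author_name: str, date_published: str, url: str) -> str:
    """Build a JSON-LD <script> tag describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    payload = json.dumps(data, indent=2)
    # Escape "</" so a value can never close the script tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical values for illustration.
print(article_json_ld(
    "Technical SEO basics",
    "Jane Doe",
    "2024-01-15",
    "https://example.com/blog/technical-seo-basics",
))
```

The resulting tag is placed in the page's HTML, where crawlers can read it alongside the visible content.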

Core Web Vitals measure the user experience on your site, focusing on loading speed (Largest Contentful Paint), interactivity (Interaction to Next Paint), and visual stability (Cumulative Layout Shift). A strong performance in these areas directly impacts technical SEO strategies.
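The three areas correspond to the metrics Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS). The sketch below rates measured values against the thresholds Google publishes for each. The function name `rate` and the sample measurements are made up for illustration, and the thresholds should be checked against current documentation before relying on them.

```python
# (good up to, needs improvement up to); anything above is "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a Core Web Vitals measurement."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page.
measurements = {"LCP": 2.1, "INP": 350, "CLS": 0.3}
for metric, value in measurements.items():
    print(metric, value, rate(metric, value))
```

Rating each metric separately makes it easier to see which aspect of the page needs work first.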

Optimising for structured data and Core Web Vitals leads to smarter search engine optimisation tactics. As Google refines its crawling algorithm, incorporating AI-driven insights makes these elements crucial for effective website optimisation. Pay attention to both aspects to stay ahead in evolving SEO practices.

Considering mobile friendliness and HTTPS usage

Mobile friendliness plays a vital role in technical SEO. Google predominantly uses the mobile version of a page for indexing and ranking, so websites that are optimised for mobile devices tend to perform better in search. This trend stems from the rise in mobile browsing.

Users want fast and responsive sites on their smartphones.

HTTPS usage is equally crucial. Secure websites build trust with visitors. Google also treats HTTPS as a ranking signal, although a lightweight one. Sites without HTTPS are not penalised as such, but browsers may label them "Not secure", which can cost them visitors and visibility in search results.

Prioritising both mobile optimisation and secure connections strengthens your overall SEO strategy.
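Both points lend themselves to a quick automated check. The sketch below tests whether a URL uses HTTPS and whether the page declares a responsive viewport. The function `basic_checks` and the sample HTML are assumptions, and a viewport tag is only a rough proxy for mobile friendliness, not a full test.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ViewportFinder(HTMLParser):
    """Records the content of <meta name="viewport"> if present."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attributes = dict(attrs)
            if (attributes.get("name") or "").lower() == "viewport":
                self.viewport = attributes.get("content") or ""


def basic_checks(url: str, html: str) -> dict[str, bool]:
    """Check for HTTPS and a responsive viewport declaration."""
    finder = ViewportFinder()
    finder.feed(html)
    return {
        "https": urlparse(url).scheme == "https",
        "responsive_viewport": finder.viewport is not None
        and "width=device-width" in finder.viewport,
    }


# Hypothetical page for illustration.
page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
print(basic_checks("https://example.com/", page))
print(basic_checks("http://example.com/", "<head></head>"))
```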

Conclusion

Google's enhanced crawling technology shapes technical SEO strategies significantly. It demands that websites become more crawl-friendly. Site owners must focus on factors affecting crawling and indexing.

Correct HTTP status codes, optimised URLs, and strong internal linking matter now more than ever. Embracing structured data, monitoring Core Web Vitals, and prioritising mobile friendliness and HTTPS will keep sites competitive in search rankings.