Intro

The term "free proxy list" refers to publicly available collections of proxy server addresses that anyone can use to route internet traffic through alternate IP addresses. These proxies let users bypass content restrictions, conceal their identities, or distribute requests to avoid detection. The best proxies for scraping, by contrast, are those that provide consistent uptime, high anonymity, and the ability to get past anti-bot systems while retrieving structured or unstructured data from web sources.

When evaluating access options, many developers start with a free proxy list because of its low barrier to entry. However, choosing the right proxy for scraping depends on performance, rotation logic, and the reputation of the IP address pool. A free proxy list may offer a temporary solution, but scaling and reliability usually require deeper analysis and awareness of the underlying infrastructure.

The distinction lies in usage. Both act as intermediaries, but the best proxies for scraping are selected for durability and adaptability in data-gathering contexts such as price comparison, sentiment analysis, search engine results tracking, and e-commerce intelligence. Understanding how these proxies operate, and how lists differ in reliability, can affect success rates across industries.

Verified Trends in Proxy Adoption and Performance

Global proxy usage continues to increase as businesses integrate automated web data into decision-making processes. According to a 2023 forecast by ResearchAndMarkets, the proxy service market is projected to surpass $2.3 billion by 2027, with web scraping tools accounting for a substantial share of usage. A key driver is the rising complexity of content delivery networks and the need to simulate genuine user behavior.

Analysis by Statista found that approximately 64% of businesses employing scraping tools encountered IP-based blocking within their first three months of operation. Those using proxies with rotating IPs and header randomization kept their operations running significantly longer. In contrast, reliance on unverified proxies from a typical free proxy list led to a higher block rate, often because those IPs were overused or had a history of abuse.
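
To make "rotating IPs and header randomization" concrete, here is a minimal Python sketch, assuming you already have a pool of working proxy URLs. The addresses come from the 203.0.113.0/24 documentation range and the User-Agent strings are illustrative placeholders; `RotatingRequestConfig` is a hypothetical helper, not part of any library. It only builds per-request settings and sends nothing.

```python
import itertools
import random

# Hypothetical proxy addresses (documentation IP range); replace with your own pool.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:3128",
]

# Illustrative header values to vary between requests.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_5) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]
ACCEPT_LANGUAGES = ["en-US,en;q=0.9", "en-GB,en;q=0.8", "de-DE,de;q=0.7,en;q=0.5"]


class RotatingRequestConfig:
    """Round-robin over proxies and pick a random header combination per request."""

    def __init__(self, proxies, user_agents, languages, seed=None):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._proxy_cycle = itertools.cycle(proxies)
        self._user_agents = user_agents
        self._languages = languages
        self._rng = random.Random(seed)  # seedable so behaviour is reproducible in tests

    def next_config(self):
        proxy = next(self._proxy_cycle)
        return {
            # Same shape as the `proxies` argument of the requests library.
            "proxies": {"http": proxy, "https": proxy},
            "headers": {
                "User-Agent": self._rng.choice(self._user_agents),
                "Accept-Language": self._rng.choice(self._languages),
            },
        }
```

Each `next_config()` result has keys matching the `proxies` and `headers` keyword arguments of the third-party `requests` library, so it could be used as `requests.get(url, timeout=10, **cfg.next_config())`. Round-robin is the simplest rotation policy: it spreads load evenly but ignores proxy health.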

A report from the Open Data Initiative in 2022 also highlighted that data collected using residential or mobile proxies had 78% higher integrity than that gathered using shared datacenter proxies. These metrics support the preference for strategic IP rotation over general access.

Furthermore, a study conducted by the International Web Research Association emphasized the increasing use of proxy management APIs that filter proxy pools based on latency, geolocation, and uptime. This signals a shift from static lists to active monitoring systems that evaluate real-world performance metrics.
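
At its simplest, a proxy management layer of this kind filters a pool on measured statistics. The sketch below assumes you already collect per-proxy latency, uptime, and an observed country from your own health checks; the `ProxyStats` fields and the default thresholds are illustrative assumptions, not values from the study cited above.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class ProxyStats:
    address: str
    country: str       # ISO 3166-1 alpha-2 code, as measured rather than as advertised
    latency_ms: float  # e.g. median response time over recent checks
    uptime: float      # fraction of recent checks that succeeded, 0.0-1.0


def filter_pool(
    pool: Iterable[ProxyStats],
    max_latency_ms: float = 1500.0,
    min_uptime: float = 0.9,
    country: Optional[str] = None,
) -> List[ProxyStats]:
    """Keep proxies meeting the latency/uptime thresholds (and country, if given), fastest first."""
    eligible = [
        p for p in pool
        if p.latency_ms <= max_latency_ms
        and p.uptime >= min_uptime
        and (country is None or p.country == country)
    ]
    return sorted(eligible, key=lambda p: p.latency_ms)
```

Because the filter works on measured values rather than on what a list advertises, rerunning it after each round of checks turns a static list into a continuously re-ranked pool.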

Common Applications and Professional Use Cases

Organizations apply proxy technologies in diverse ways, depending on objectives. For market intelligence teams, the best proxies for scraping offer a scalable path to collect data from thousands of product pages, reviews, and regional pricing indexes. Without such proxies, companies risk being blocked or throttled mid-process, corrupting the integrity of their analysis.

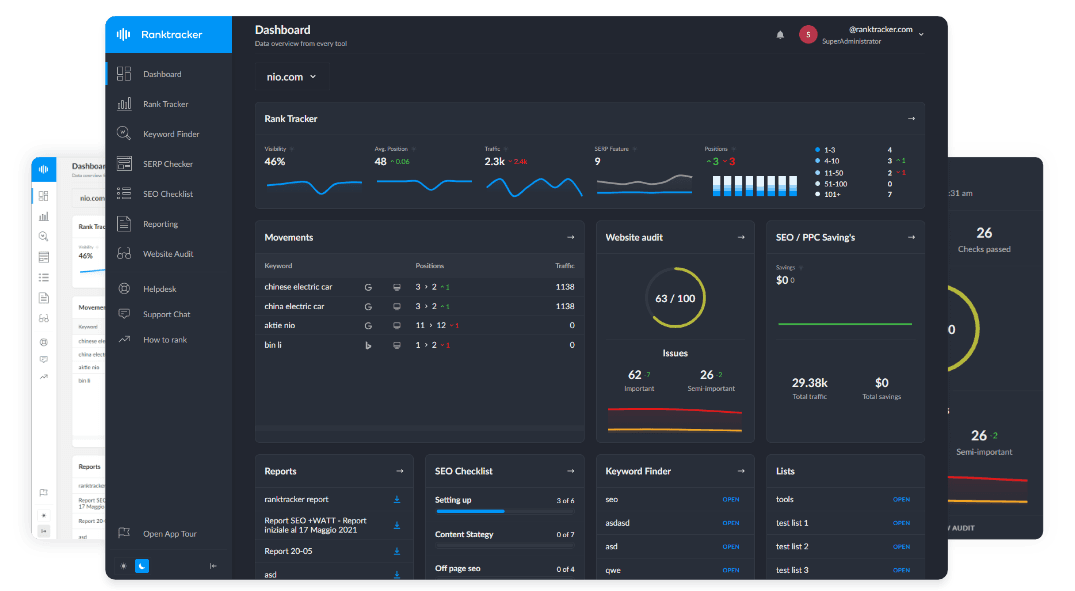

The All-in-One Platform for Effective SEO

Behind every successful business is a strong SEO campaign. But with countless optimization tools and techniques out there to choose from, it can be hard to know where to start. Well, fear no more, cause I've got just the thing to help. Presenting the Ranktracker all-in-one platform for effective SEO

We have finally opened registration to Ranktracker absolutely free!

In financial sectors, analysts scrape trading data, earnings reports, and sentiment scores from investor forums. A free proxy list might offer temporary access, but gaps in availability and inconsistent response times can interrupt time-sensitive workflows. Rotating proxies with session persistence are often necessary when accessing login-gated or JavaScript-heavy platforms.
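
Session persistence (often called "sticky sessions") means every request in one logical session leaves through the same IP, so a login cookie is not suddenly presented from a different address. A minimal sketch, assuming a plain list of proxy URLs and a hypothetical `StickySessions` helper with a configurable time-to-live:

```python
import itertools
import time
from typing import Callable, Dict, List, Tuple


class StickySessions:
    """Pin each logical session (e.g. one logged-in account) to one proxy for `ttl` seconds."""

    def __init__(self, proxies: List[str], ttl: float = 600.0,
                 clock: Callable[[], float] = time.monotonic):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._next_proxy = itertools.cycle(proxies)
        self._ttl = ttl
        self._clock = clock
        self._assigned: Dict[str, Tuple[str, float]] = {}  # session -> (proxy, expiry time)

    def proxy_for(self, session_id: str) -> str:
        now = self._clock()
        entry = self._assigned.get(session_id)
        if entry is not None and entry[1] > now:
            return entry[0]  # still inside the sticky window: reuse the same exit IP
        proxy = next(self._next_proxy)  # new or expired session: assign the next proxy
        self._assigned[session_id] = (proxy, now + self._ttl)
        return proxy

    def release(self, session_id: str) -> None:
        """Forget a session, e.g. after logout, so its next request gets a fresh proxy."""
        self._assigned.pop(session_id, None)
```

The injectable `clock` exists mainly for testing; the default `time.monotonic` keeps wall-clock adjustments from extending or cutting short a session.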

Recruiting and HR platforms use scraping to gather job postings across hundreds of portals. By routing requests through selected IPs from the best proxies for scraping, they run into CAPTCHAs and IP-based access blocks far less often, allowing job boards to aggregate opportunities without direct partnerships.

Academic research initiatives rely on large-scale web data collection for studies on misinformation, content moderation, and regional content access. A free proxy list can assist with quick sampling or pilot testing, but for sustained access across time zones and languages, more reliable proxies are necessary.

Digital marketers turn to proxy infrastructure for SEO audits, rank tracking, and ad verification. These tasks require geographically targeted access that free lists can rarely support due to static or mislabeled IP geolocations. The best proxies for scraping include options to simulate device types and browser headers, ensuring data is collected under realistic browsing conditions.

Limitations and Workarounds in Proxy-Based Data Collection

Despite their appeal, proxies from a free proxy list present several limitations. The most pressing issue is reliability. Free proxies are often used by many people simultaneously, which increases the likelihood of bans or connection drops. This inconsistency makes them unsuitable for tasks that require sustained uptime or sequential access to paginated data.

Security risks also emerge. Some free proxies intercept unencrypted traffic or inject ads into returned content. Using these proxies without proper SSL/TLS handling may expose data to unauthorized monitoring or manipulation. As a result, experienced users restrict such proxies to non-sensitive tasks or run them inside containerized environments.
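
One common mitigation is to send only HTTPS traffic through untrusted proxies and keep certificate verification switched on. For an HTTPS URL, Python's `urllib` asks the proxy to open a `CONNECT` tunnel, so the proxy relays encrypted bytes it cannot read, and any attempt to substitute its own certificate fails verification. The sketch below uses only the standard library; the helper names and the proxy address are hypothetical.

```python
import ssl
import urllib.request
from urllib.parse import urlsplit


def require_https(url: str) -> str:
    """Refuse plain-HTTP targets: through an untrusted proxy they can be read or rewritten."""
    if urlsplit(url).scheme != "https":
        raise ValueError(f"refusing non-HTTPS URL through untrusted proxy: {url}")
    return url


def opener_for_untrusted_proxy(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build a urllib opener that tunnels HTTPS through `proxy_url` with certificate checks on."""
    # create_default_context() verifies the server certificate and hostname by default.
    context = ssl.create_default_context()
    return urllib.request.build_opener(
        urllib.request.ProxyHandler({"https": proxy_url}),  # only HTTPS goes via the proxy
        urllib.request.HTTPSHandler(context=context),
    )
```

Usage would look like `opener_for_untrusted_proxy("http://203.0.113.10:8080").open(require_https(url), timeout=10)`. Because only the `https` scheme is mapped, this opener would send plain-HTTP requests directly rather than through the proxy; `require_https` rejects them up front so that never happens silently. The proxy operator can still see which hostnames you connect to.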

Another concern is rotation logic. Effective scraping requires managing IP sessions over time to simulate natural behavior. Static proxies from a free list usually offer no session control, and they can go offline or change behavior unpredictably. The result is broken scraping scripts, lost progress, or duplicated requests.
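
Lost progress and duplicated requests are usually handled outside the proxy layer, by recording which pages have already been fetched. A minimal sketch, assuming a hypothetical `fetch(url)` callable that raises when the current proxy drops, and a JSON checkpoint file on local disk:

```python
import json
from pathlib import Path
from typing import Callable, List


def crawl_paginated(
    page_urls: List[str],
    fetch: Callable[[str], str],
    checkpoint: Path,
) -> List[str]:
    """Fetch pages in order, recording progress so a proxy failure does not restart the crawl."""
    done = set(json.loads(checkpoint.read_text())) if checkpoint.exists() else set()
    results = []
    for url in page_urls:
        if url in done:
            continue  # fetched in an earlier run: skip it to avoid a duplicate request
        results.append(fetch(url))  # may raise if the current proxy drops mid-crawl
        done.add(url)
        checkpoint.write_text(json.dumps(sorted(done)))  # persist progress after every page
    return results
```

After a failure you can switch to another proxy and call the function again with the same checkpoint; it resumes at the first unfetched page. Rewriting the whole checkpoint on every page is fine for hundreds of pages, but very large crawls would be better served by an append-only log.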

Rate limits imposed by websites pose further challenges. High-frequency access from a single IP, even one taken from a free proxy list, can trigger server-side throttling or IP blacklisting. Proxies used without header customization, delay intervals, or retry logic quickly become ineffective.
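
Retry logic with growing, randomized delays is the usual answer to throttling. The sketch below implements exponential backoff with "full jitter", where each wait is drawn uniformly between zero and an exponentially growing cap. `fetch` is a hypothetical callable returning `(status_code, body)`; the set of retryable status codes and the delay parameters are assumptions to tune per site.

```python
import random
import time
from typing import Callable, List, Tuple

# Status codes treated as transient; 429 is "Too Many Requests". Tune per target site.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def backoff_delays(retries: int, base: float = 1.0, cap: float = 30.0) -> List[float]:
    """Full-jitter backoff: retry n waits a random time in [0, min(cap, base * 2**n)]."""
    return [random.uniform(0, min(cap, base * 2 ** n)) for n in range(retries)]


def fetch_with_retry(
    fetch: Callable[[str], Tuple[int, bytes]],
    url: str,
    retries: int = 4,
    base: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, bytes]:
    """Call fetch(url), retrying throttled/server-error responses and connection errors."""
    delays = backoff_delays(retries, base)
    for attempt in range(retries + 1):
        try:
            status, body = fetch(url)
        except OSError:
            if attempt == retries:
                raise  # out of retries: surface the connection error
        else:
            if status not in RETRYABLE_STATUS or attempt == retries:
                return status, body  # success, a non-retryable error, or the last attempt
        sleep(delays[attempt])
    raise RuntimeError("unreachable")
```

Passing `sleep` as a parameter keeps the function testable; in real use it defaults to `time.sleep`. Jitter matters when many workers share a pool, since identical fixed delays make them retry in lockstep.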

Some free proxy lists include proxies that are geographically misrepresented or outdated. This misalignment affects tasks like geolocation-specific ad testing or multi-region content validation. Accuracy matters when the objective is to reflect user behavior from specific cities, carriers, or browsing habits.
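
Mislabeled locations can be caught by comparing what a list claims with what you actually observe. This sketch assumes a hypothetical `lookup_country(proxy)` callable that you supply, for example one that requests an IP-echo endpoint through the proxy and resolves the returned exit IP with a GeoIP database; no particular service or database is implied.

```python
from typing import Callable, Dict, List, Optional, Tuple


def find_mislabeled(
    advertised: Dict[str, str],
    lookup_country: Callable[[str], Optional[str]],
) -> List[Tuple[str, str, Optional[str]]]:
    """Return (proxy, claimed, observed) for each proxy whose exit country differs from its label.

    `advertised` maps proxy URL -> ISO country code as published by the list.
    `lookup_country` returns the observed exit country, or None if the check failed.
    """
    mismatches = []
    for proxy, claimed in advertised.items():
        observed = lookup_country(proxy)
        # A failed lookup is treated as a mismatch: the label could not be confirmed.
        if observed is None or observed.upper() != claimed.upper():
            mismatches.append((proxy, claimed, observed))
    return mismatches
```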

To address these issues, users often blend free access with more structured infrastructure. Proxy testing tools, logging systems, and error handling frameworks are integrated to detect when a proxy fails or delivers inconsistent results. This allows for quick replacement without halting the entire operation.
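
The detect-and-replace loop described above can be sketched as a small pool object that counts consecutive failures, logs them through Python's standard `logging` module, and evicts a proxy once it crosses a threshold. `FailoverPool` and its default threshold of three failures are illustrative assumptions; what counts as a "failure" (timeout, CAPTCHA page, unexpected content) is left to the caller.

```python
import logging
import random
from typing import Dict, List, Optional

log = logging.getLogger("proxy_pool")


class FailoverPool:
    """Hand out proxies at random; evict one after `max_failures` consecutive failures."""

    def __init__(self, proxies: List[str], max_failures: int = 3, seed: Optional[int] = None):
        self._failures: Dict[str, int] = {p: 0 for p in proxies}  # proxy -> consecutive failures
        self._max_failures = max_failures
        self._rng = random.Random(seed)

    def get(self) -> str:
        if not self._failures:
            raise RuntimeError("no healthy proxies left; refill the pool")
        return self._rng.choice(list(self._failures))

    def add(self, proxy: str) -> None:
        """Add a replacement proxy, e.g. one that just passed a health check."""
        self._failures.setdefault(proxy, 0)

    def report_success(self, proxy: str) -> None:
        if proxy in self._failures:
            self._failures[proxy] = 0  # reset: only consecutive failures count

    def report_failure(self, proxy: str, reason: str = "") -> None:
        if proxy not in self._failures:
            return  # already evicted
        self._failures[proxy] += 1
        count = self._failures[proxy]
        log.warning("proxy %s failed (%d/%d): %s", proxy, count, self._max_failures, reason)
        if count >= self._max_failures:
            del self._failures[proxy]
            log.error("evicted proxy %s", proxy)

    def healthy(self) -> List[str]:
        return list(self._failures)
```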

Proxy Evolution and Industry-Wide Forecasts

The proxy ecosystem is evolving in response to both user demands and web defense mechanisms. By 2026, it is expected that over 70% of data scraping activity will rely on proxy pools governed by machine learning algorithms. These systems adjust IP rotation, timing, and behavior simulation based on website feedback, improving stealth and success rates.
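
A full machine-learning controller is beyond a short example, but the feedback loop it relies on can be illustrated with a simpler, swapped-in technique: an epsilon-greedy selector that mostly picks the proxy with the best observed success rate and occasionally explores others. This is a stand-in heuristic, not the systems the forecast refers to; the `epsilon` value and the optimistic score for untried proxies are assumptions.

```python
import random
from typing import Dict, List, Optional


class EpsilonGreedySelector:
    """Prefer proxies with the best observed success rate, but keep exploring the rest."""

    def __init__(self, proxies: List[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self._stats: Dict[str, List[int]] = {p: [0, 0] for p in proxies}  # proxy -> [successes, attempts]
        self._epsilon = epsilon
        self._rng = random.Random(seed)

    def _rate(self, proxy: str) -> float:
        successes, attempts = self._stats[proxy]
        # Optimistic default: untried proxies score 1.0 so each gets tried early on.
        return 1.0 if attempts == 0 else successes / attempts

    def choose(self) -> str:
        proxies = list(self._stats)
        if self._rng.random() < self._epsilon:
            return self._rng.choice(proxies)  # explore: random proxy
        return max(proxies, key=self._rate)   # exploit: best rate so far (first wins ties)

    def record(self, proxy: str, success: bool) -> None:
        """Feed back the outcome of a request, e.g. success = not blocked and content valid."""
        entry = self._stats[proxy]
        entry[0] += int(success)
        entry[1] += 1
```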

Free proxy lists are expected to become less central in large-scale operations but may remain valuable for educational purposes, prototyping, or regional sampling. Developers continue to use these lists to test logic, verify scripts, or conduct basic penetration audits in controlled environments.

There is growing interest in modular proxy architecture. Users now design scraping systems that can switch between residential, datacenter, and mobile proxies depending on target site behavior. Rotating residential proxies are commonly used for reliability, while static proxies from a free proxy list can function as fallback options or as a secondary layer when primary systems fail.
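
A modular setup like this can be reduced to an ordered list of tiers plus a health check. In this sketch the tier names, their default order, and the `is_healthy` callback are hypothetical; a real system would likely vary the order per target site, as described above.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Hypothetical tier names and default preference order (most reliable first).
DEFAULT_ORDER = ("residential", "mobile", "datacenter", "free_list")


def pick_proxy(
    tiers: Dict[str, List[str]],
    is_healthy: Callable[[str], bool],
    order: Sequence[str] = DEFAULT_ORDER,
) -> Optional[Tuple[str, str]]:
    """Return (tier, proxy) from the first tier in `order` that has a healthy proxy, else None."""
    for tier in order:
        for proxy in tiers.get(tier, []):
            if is_healthy(proxy):
                return tier, proxy
    return None  # every tier exhausted: caller should pause or alert
```

For a site that tolerates datacenter IPs, you might pass `order=("datacenter", "free_list")` to skip the more expensive tiers entirely.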

Geographic diversity remains a major theme. Demand for proxies based in Southeast Asia, Africa, and South America is increasing, especially among companies expanding global operations. However, availability in these regions remains limited on free proxy lists, reinforcing the need for curated and dynamically sourced IP pools.

Data privacy regulations may shape how proxy systems are built. With laws like GDPR and CPRA tightening control over network identity and digital access, proxy services, whether free or paid, must incorporate consent tracking and route transparency. Proxies used for scraping will need audit trails and logging to maintain compliance.
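
As an illustration of a per-request audit trail, the sketch below appends one JSON Lines record per proxied request. The field set (`timestamp`, `proxy`, `url`, `status`, `purpose`) is an assumption chosen for the example; it is not a format required by GDPR, CPRA, or any regulator.

```python
import json
from datetime import datetime, timezone
from typing import Optional, TextIO


def write_audit_record(
    out: TextIO,
    proxy: str,
    url: str,
    status: Optional[int],
    purpose: str,
    now: Optional[datetime] = None,
) -> dict:
    """Append one JSON Lines record describing a proxied request and return it."""
    record = {
        # UTC timestamps avoid ambiguity when workers run in different time zones.
        "timestamp": (now or datetime.now(timezone.utc)).isoformat(),
        "proxy": proxy,
        "url": url,
        "status": status,    # None if the request never completed
        "purpose": purpose,  # the declared reason for collection, e.g. "price-monitoring"
    }
    out.write(json.dumps(record, sort_keys=True) + "\n")
    return record
```

In practice `out` would be a file opened in append mode, e.g. `open("audit.jsonl", "a", encoding="utf-8")`.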

Another trend involves proxy use in AI training. As machine learning systems require diverse data inputs from across the web, proxies enable ethical and distributed data gathering. Free proxies may serve as an entry point for hobbyist model builders, but scaled operations will lean toward specialized proxy solutions that manage traffic volume and source verification.

Evaluating Proxy Options with Strategic Planning

When reviewing a free proxy list, it’s tempting to see it as a quick solution. But if your goal is sustained data access, the best proxies for scraping will align with your workload, location needs, and request volume. Free proxies may support temporary research or small experiments but tend to fall short under continuous load or advanced security environments.

What matters most is not where the proxy comes from, but how it fits into your broader architecture. Are you targeting a dynamic site with rate limits? Do you need to preserve sessions across several steps? Is geolocation accuracy critical? These questions guide the structure of your proxy strategy.

While there is no universal blueprint, proxy users who combine monitoring, failover logic, and scalable infrastructure tend to succeed. Whether working from a free proxy list or building a pool from scratch, the key lies in control, flexibility, and a clear understanding of each option's limitations.

The most consistent performance comes from proxies selected with intent, not simply availability. With traffic detection methods advancing, scraping success will depend on more than a working IP. The quality, history, and behavior of that IP will matter more than ever before. This makes strategic planning an essential part of selecting the best proxies for scraping while managing risk, performance, and scale.