Intro

You've likely heard the term "Google Crawler" or "Googlebot" thrown around in discussions about search engine optimization (SEO) and website visibility. But what exactly is Google Crawler, and why is it essential for your website's indexing and visibility in search results?

Understanding how search engines work is critical to successful digital marketing and advertising strategies. This complex process involves algorithms, web crawlers, indexing, machine learning, and more.

In this article, we will demystify the workings of Google Crawler and explore how search engine bots navigate and index websites. We'll delve into the three stages of Google Search: crawling, indexing, and serving search results, and give you some actions you can take to ensure your website is indexed correctly and visible to potential customers.

Understanding Google Crawler

![]() (Source: Google)

The Google Crawler, or Googlebot, is an automated explorer that tirelessly scans websites so that Google can index their content.

What is a web crawler?

Web crawlers, also called spiders or bots, are automated programs that find and collect data on the web. Crawlers serve many purposes, such as indexing websites, monitoring website changes, and gathering data. Googlebot and Google's other bots are Google's crawlers.

What Is The Googlebot?

Google uses different tools (crawlers and fetchers) to gather information about the web. Crawlers automatically discover and scan websites by following links from one page to another. The central crawler used by Google is called Googlebot. It's like a digital explorer that visits web pages and gathers information. Fetchers, however, are tools that act like web browsers. They request a single webpage when prompted by a user.

Google has different types of crawlers and fetchers for various purposes. For example, there's Googlebot Smartphone, which crawls and analyzes websites from a mobile perspective, and Googlebot Desktop, which does the same for desktop websites.
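
If you want to see which Googlebot variant is visiting your site, you can inspect the user agent string in your server logs. Below is a rough sketch based on the user agent formats Google publishes: the smartphone crawler's string contains "Mobile" and the desktop crawler's does not. The function names are our own, and the Chrome version in the sample string is only an example. Because user agents are easy to fake, Google recommends verifying a visitor with a reverse DNS lookup (the hostname should end in googlebot.com or google.com) followed by a forward lookup that returns the same IP; the second helper only performs the hostname-suffix part of that check.

```python
def classify_googlebot(user_agent: str) -> str:
    """Roughly classify a user agent string as a Googlebot variant.

    Heuristic only: it looks for Googlebot tokens and, for the main crawler,
    the "Mobile" token that appears in the smartphone user agent.
    """
    if "Googlebot-Image" in user_agent:
        return "googlebot-image"
    if "Googlebot" not in user_agent:
        return "not-googlebot"
    if "Mobile" in user_agent:
        return "googlebot-smartphone"
    return "googlebot-desktop"


def hostname_looks_like_google(hostname: str) -> bool:
    """Check the hostname returned by a reverse DNS lookup of the client IP.

    A full verification should also resolve this hostname forward and
    confirm that it maps back to the original IP address.
    """
    host = hostname.rstrip(".").lower()
    return host.endswith((".googlebot.com", ".google.com"))


smartphone_ua = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 "
    "Mobile Safari/537.36 (compatible; Googlebot/2.1; "
    "+http://www.google.com/bot.html)"
)
print(classify_googlebot(smartphone_ua))
print(hostname_looks_like_google("crawl-66-249-66-1.googlebot.com."))
```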

Crawlers gather the content Google uses to build its search indices and analyze websites for better search results. They follow the rules specified in a site's robots.txt file to respect website owners' preferences. Google also uses specialized crawlers for specific tasks, such as crawling images or videos, and user-triggered fetchers that retrieve content when a user requests it.

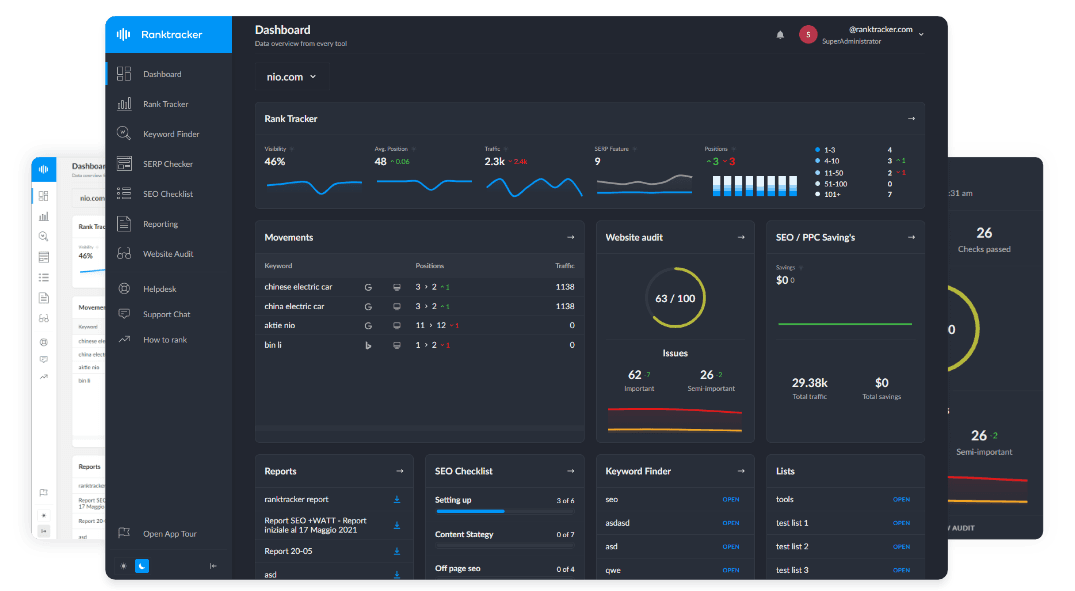

The All-in-One Platform for Effective SEO

Behind every successful business is a strong SEO campaign. But with countless optimization tools and techniques out there to choose from, it can be hard to know where to start. Well, fear no more, because I've got just the thing to help. Presenting the Ranktracker all-in-one platform for effective SEO.

We have finally opened registration to Ranktracker absolutely free!

So, when you optimize your website for search engines, you're essentially making it more inviting and accessible to these friendly digital explorers. It's like creating a clear pathway for them to understand and index your website effectively.

How The Google Crawler Works

How does Google discover and organize the vast amount of information available on the internet? Google Search works in three essential stages: crawling, indexing, and serving search results.

Crawling

How exactly does Googlebot discover new pages?

When Googlebot visits a page, it follows the embedded links, leading it to new destinations. Additionally, website owners can submit a sitemap, a list of pages they want Google to crawl. This helps the crawler find and include those pages in its index.
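
To make link-following concrete, here is a minimal sketch of breadth-first discovery: start from seed URLs (for example, the pages listed in a sitemap), extract the links on each page, and queue any URL not seen before. This is an illustration of the general technique, not Googlebot's implementation. The `fetch` parameter is a hypothetical function that returns a page's HTML or None; here a dictionary stands in for the web so the example runs offline, and a real crawler would also check robots.txt and throttle its requests.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_links(base_url, html):
    """Return absolute URLs, with #fragments removed, for links in html."""
    parser = LinkExtractor()
    parser.feed(html)
    return [urldefrag(urljoin(base_url, href)).url for href in parser.hrefs]


def discover(seeds, fetch, max_pages=100):
    """Visit seed URLs, then follow links breadth-first to unseen pages."""
    seen = set(seeds)
    queue = deque(seeds)
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # missing page or failed fetch: skip it
            continue
        visited.append(url)
        for link in extract_links(url, html):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# A tiny hypothetical site. "/landing" has no inbound links, so it is only
# found because it is passed in as a seed, the way a sitemap entry would be.
site = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog#top">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post 1</a>',
    "https://example.com/blog/post-1": "<p>No links here</p>",
    "https://example.com/landing": "<p>Campaign page</p>",
}
print(discover(["https://example.com/", "https://example.com/landing"], site.get))
```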

Googlebot uses a sophisticated algorithmic process to determine which sites to crawl, how often to crawl them, and how many pages to fetch from each site. Crawling is designed to respect websites: Googlebot aims to avoid overloading them by crawling at an appropriate speed and frequency.

Various factors can influence the crawling speed. The responsiveness of the server hosting the website is crucial. If the server experiences issues or is slow in responding to requests, it can impact how quickly Googlebot can crawl the site. Network connectivity also plays a role. If there are network-related problems between Google's crawlers and the website, it may affect the crawling speed.
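
The idea of adjusting crawl speed to server health can be sketched as a simple politeness rule. The function below is hypothetical (Google does not publish Googlebot's actual logic), and its thresholds are arbitrary: it doubles the wait between requests after a server error or a 429 "Too Many Requests" response, waits longer after a slow response, and speeds up gradually when the server responds quickly, all within fixed bounds.

```python
def next_delay(current_delay, response_seconds, status_code,
               min_delay=1.0, max_delay=60.0):
    """Hypothetical rule for the wait (in seconds) before the next request.

    Thresholds and multipliers are illustrative assumptions only.
    """
    if status_code == 429 or status_code >= 500:
        delay = current_delay * 2.0   # server is struggling: back off sharply
    elif response_seconds > 2.0:
        delay = current_delay * 1.5   # slow response: ease off
    else:
        delay = current_delay * 0.9   # healthy response: speed up a little
    # Keep the delay within the configured bounds.
    return max(min_delay, min(max_delay, delay))


delay = 4.0
for seconds, status in [(0.3, 200), (3.5, 200), (0.4, 503)]:
    delay = next_delay(delay, seconds, status)
    print(f"status {status}, {seconds}s response -> wait {delay:.2f}s")
```

Multiplicative back-off reacts quickly when a server is in trouble, while the small 0.9 factor means the crawler only regains speed slowly once things recover.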

Furthermore, website owners can use a crawl rate setting in Google Search Console to limit how fast Googlebot crawls their site.

Indexing

Indexing is the process in which Google analyzes a page's content and stores that information in its vast database, the Google Index. But what exactly happens during this stage?

Google analyzes various aspects of the web page's content, including the text, images, videos, key content tags, and attributes like title elements and alt attributes. It examines the page to understand its relevance and determine how it should be categorized within the index. During this analysis, Google also identifies duplicate pages or alternate versions of the same content, such as mobile-friendly versions or different language variations.
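
Google's duplicate detection is not public and also handles near-duplicates, but the basic idea can be illustrated with exact-duplicate detection: normalize each page's text, hash it, and group URLs that share a hash. In this sketch the `pages` dictionary is hypothetical input mapping each URL to its extracted text. A tracking parameter such as utm_source often produces exactly this kind of duplicate, which is what a canonical tag is meant to resolve.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """SHA-256 of the text after lowercasing and collapsing whitespace."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def group_exact_duplicates(pages):
    """Return sorted lists of URLs whose normalized text is identical.

    pages maps URL -> page text; only groups with two or more URLs are returned.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "https://example.com/shoes": "Red running shoes\nFree shipping",
    "https://example.com/shoes?utm_source=mail": "Red  running shoes Free shipping",
    "https://example.com/boots": "Leather boots",
}
print(group_exact_duplicates(pages))
```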

While indexing is generally reliable, there can be issues that website owners may encounter. For example, low-quality content or poorly designed websites can hinder indexing. Ensuring that web page content is high quality, relevant, and well-structured is crucial for proper indexing.

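Another common issue is a robots.txt file that blocks crawling. Website owners can use the robots.txt file to tell search engines which parts of their site crawlers may access. However, if important pages are blocked, Google can't crawl their content, so they may not appear in search results, or may appear without a description. Note that robots.txt controls crawling rather than indexing; to keep a page out of the index, use a noindex directive instead. Regularly reviewing and updating the robots.txt file can help you avoid this issue.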
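
One way to review your robots.txt is to test it against a list of pages you expect to rank. The sketch below uses Python's standard `urllib.robotparser` module; the robots.txt content and URLs are hypothetical. Keep in mind that this parser applies simple prefix rules in file order and does not implement all of Google's matching behavior (such as `*` and `$` wildcards or longest-match precedence), so treat the result as a first pass and confirm with Google's own tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; in practice, paste in or download your site's file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /blog/
"""

# Hypothetical pages you expect to appear in search results.
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/blog/google-crawler-guide",
    "https://example.com/checkout/cart",
]


def blocked_for(user_agent, robots_txt, urls):
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_for("Googlebot", ROBOTS_TXT, IMPORTANT_URLS))
```

Here the audit flags both the checkout page, which is probably blocked on purpose, and the blog post, which is likely a mistake caused by the overly broad `Disallow: /blog/` rule.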

Serving Search Results

Once Google has crawled and indexed web pages, the final stage is serving search results. This is where the magic happens, as Google uses complex algorithms to determine the most relevant and high-quality results for each user's search query. So, how does Google rank and serve search results?

Relevancy is a critical factor in determining search results. Google considers hundreds of factors to assess the relevance of web pages to a user's query. These factors include the content of the web page, its metadata, user signals, and the overall reputation and authority of the website. Google's algorithms analyze these factors to understand which pages will likely provide the best answer to the user's search intent.
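
Google's ranking systems weigh hundreds of signals and are not public, so no short example can reproduce them. To illustrate the narrower idea of matching a query to page content, here is TF-IDF, a classic textbook relevance measure: a page scores higher when the query's terms appear often in it but rarely across other pages. The document names and texts are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_scores(query, documents):
    """Score each document against the query with basic TF-IDF.

    documents maps a name to its text; returns name -> score.
    """
    tokenized = {name: tokenize(text) for name, text in documents.items()}
    n_docs = len(tokenized)
    doc_freq = Counter()  # number of documents containing each term
    for tokens in tokenized.values():
        doc_freq.update(set(tokens))

    scores = {}
    for name, tokens in tokenized.items():
        counts = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            if counts[term] == 0:
                continue
            tf = counts[term] / len(tokens)              # term frequency
            idf = math.log(n_docs / doc_freq[term])      # rarer terms weigh more
            score += tf * idf
        scores[name] = score
    return scores


docs = {
    "repair-guide": "How to repair a bicycle: bicycle repair tools and tips",
    "shop-list": "Bicycle shops in Paris",
    "recipes": "Easy dinner recipes",
}
scores = tfidf_scores("bicycle repair", docs)
print(sorted(scores, key=scores.get, reverse=True))
```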

It's important to note that search results can vary based on several factors, such as the user's location, language, and device (desktop or mobile). For example, searching for "bicycle repair shops" in Paris may yield different results than the same search in Hong Kong.

Google also considers the specific search features relevant to a user's query. These features can include local results, images, videos, knowledge panels, and more. The presence of these features in search results depends on the nature of the search query and its intent.

The user's query plays a crucial role in shaping the search results. Google aims to understand the user's search intent and provide the most helpful information. By analyzing the query, Google can deliver results that align with the user's needs and preferences.

Best Practices for Google Crawler Optimization

Optimizing your website for the Google crawler is critical to getting your web pages discovered, indexed, and ranked in Google's search results. By implementing best practices for Google Crawler Optimization, you can enhance your website's visibility and improve its chances of attracting organic traffic.

Technical Optimization

- Optimize Site Structure: Create a logical and organized hierarchy of pages using clear and descriptive URLs, organize your content into relevant categories and subcategories, and implement internal linking to establish a coherent website structure.

- Robots.txt File: The robots.txt file tells search engine crawlers which parts of your website they may crawl. By properly configuring it, you can control the crawler's access to certain parts of your website, keep it away from low-value or duplicate URLs so that crawling focuses on your essential pages. Keep in mind that robots.txt is publicly readable and is not a security measure, so protect genuinely sensitive content in other ways, such as requiring a login.

- Canonical Attributes: Handling duplicate content and URL parameters is crucial for technical optimization. Add a canonical tag (a link element with the rel="canonical" attribute) to specify the preferred version of a page and avoid potential duplicate content issues.

- XML Sitemap: Generate an XML sitemap and submit it to Google Search Console. The sitemap helps the crawler discover your web pages efficiently and tells Google which pages you want indexed.
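
Most CMSs and SEO plugins generate sitemaps for you, but the format is simple enough to build yourself. Here is a minimal sketch that follows the sitemap protocol published at sitemaps.org (a urlset element in the http://www.sitemaps.org/schemas/sitemap/0.9 namespace, with one url entry per page containing loc and an optional lastmod). The `build_sitemap` function and the page list are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, lastmod) pairs.

    lastmod is a W3C date string such as "2024-01-15", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes special characters such as & automatically.
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


xml_text = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/google-crawler-guide", None),
])
print(xml_text)
```

Save the output as a file such as sitemap.xml at the root of your site, then submit its URL in Search Console.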

By implementing these technical optimization best practices, you can enhance the accessibility of your website for the Google crawler, improve the indexation of your pages, and prevent potential issues related to duplicate content and URL parameters. This, in turn, contributes to better visibility and rankings in search results, ultimately driving more organic traffic to your website.

Content Optimization

Content optimization plays a vital role in maximizing your site visibility. High-quality content with a clear structure incorporating keywords, meta tags, and image attributes helps Google understand your content and improves the chances of ranking your web pages.

- Post Structure: Pages should be written clearly for better readability and comprehension. An SEO-friendly post structure typically starts with the H1 tag, followed by H2 tags and other subheadings in descending order of importance: H3, H4, etc.

- Keywords: Use primary and secondary keywords throughout the post and match the search intent. Incorporate keywords naturally and strategically throughout your content, in headings, subheadings, and within the body text.

- Meta Tags: Use the primary keyword in your title tag and meta description. The meta description should entice readers to click on the link.

- Image Optimization: Use descriptive file names, alt attributes, and title attributes.
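
The checklist above can be partly automated. The sketch below parses a page with Python's built-in `html.parser` and reports a few of the issues mentioned: a missing or repeated H1, headings that skip a level, a primary keyword absent from the title tag or meta description, and images without alt text. The `audit_page` function, its rules, and the sample HTML are hypothetical simplifications of this checklist, not rules Google enforces.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Collect heading levels, title, meta description, and images missing alt."""

    def __init__(self):
        super().__init__()
        self.headings = []            # heading levels in document order, e.g. [1, 2, 3]
        self.images_missing_alt = []  # src values of images with empty or no alt
        self.title = ""
        self.meta_description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("h1", "h2", "h3", "h4", "h5", "h6"):
            self.headings.append(int(tag[1]))
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            self.images_missing_alt.append(attrs.get("src") or "")
        elif tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html, primary_keyword):
    """Return a list of human-readable issues found in html."""
    page = OnPageAudit()
    page.feed(html)
    issues = []
    if page.headings.count(1) != 1:
        issues.append(f"expected one H1, found {page.headings.count(1)}")
    for prev, cur in zip(page.headings, page.headings[1:]):
        if cur > prev + 1:
            issues.append(f"heading jumps from H{prev} to H{cur}")
    keyword = primary_keyword.lower()
    if keyword not in page.title.lower():
        issues.append("primary keyword missing from title tag")
    if keyword not in page.meta_description.lower():
        issues.append("primary keyword missing from meta description")
    for src in page.images_missing_alt:
        issues.append(f"image without alt text: {src}")
    return issues


html = """<html><head>
<title>Google Crawler Guide</title>
<meta name="description" content="Learn how the Google crawler works.">
</head><body>
<h1>Google Crawler Guide</h1>
<h2>Crawling</h2>
<h4>Crawl rate</h4>
<img src="googlebot.png">
</body></html>"""
print(audit_page(html, "google crawler"))
```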

Use tools like Ranktracker's Website Audit Tool to identify technical SEO issues and the SEO Checklist to optimize your content. Google Search Console is a powerful free tool for uncovering how the Google Crawler sees your website. Leverage it to take your search engine optimization efforts to the next level.

Google Search Console

Google Search Console is a powerful tool that allows website owners to monitor and optimize their website's performance in Google Search. Here's how you can use Google Search Console in conjunction with the Google Crawler:

- Submit Your Sitemap: A sitemap is a file that lists the pages on your website, helping the crawler discover and index your content more efficiently. With Google Search Console, you can submit your sitemap so Google knows about all your important pages.

- Monitoring Crawl Errors: Google Search Console provides detailed reports of crawl errors, such as pages Googlebot couldn't access or URLs that returned errors. Monitor these reports regularly so you can fix problems that prevent the crawler from indexing your site correctly.

- Fetch as Google: The legacy Fetch as Google tool let you see how the Google Crawler renders your site; in the current Search Console, the live test in the URL Inspection tool serves this purpose. It helps you identify issues that affect how the crawler views your content.

- URL Inspection Tool: Analyze how a specific URL on your site is indexed and appears in the search results. It shows information about crawling, indexing, and any issues found.

- Search Performance: Google Search Console has detailed data on how your website performs in Google Search, including impressions, clicks, and average position. Gain insights into the keywords and pages driving traffic to your site. This information helps you align your content strategy with user intent and optimize your pages to improve their visibility in search results.
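
A common way to act on Search Performance data is to look for queries that earn many impressions but few clicks, since a better title tag or meta description may lift their click-through rate (CTR). The sketch below assumes you have already exported query rows with the report's Clicks, Impressions, and Position metrics; the `QueryRow` type, the sample rows, and the thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    query: str
    clicks: int
    impressions: int
    position: float  # average position in search results


def ctr(row):
    """Click-through rate: clicks divided by impressions."""
    return row.clicks / row.impressions if row.impressions else 0.0


def low_ctr_opportunities(rows, min_impressions=1000, max_ctr=0.02):
    """Queries shown often but rarely clicked, most impressions first."""
    hits = [r for r in rows if r.impressions >= min_impressions and ctr(r) <= max_ctr]
    return sorted(hits, key=lambda r: r.impressions, reverse=True)


rows = [
    QueryRow("what is googlebot", clicks=12, impressions=4000, position=6.2),
    QueryRow("google crawler", clicks=300, impressions=5000, position=2.1),
    QueryRow("crawl budget", clicks=5, impressions=1500, position=9.8),
    QueryRow("robots.txt example", clicks=1, impressions=200, position=14.0),
]
for r in low_ctr_opportunities(rows):
    print(f"{r.query}: CTR {ctr(r):.1%}, avg position {r.position}")
```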

Conclusion

Google Crawler is central to how your website is indexed and shown in Google's search results. Knowing how it works will help you optimize your content for search engine visibility and improve your website's performance, which makes it a cornerstone of any digital marketing strategy.

Remember, optimizing for the Google Crawler is an ongoing process that requires continuous evaluation, adaptation, and commitment to providing the best user experience.

FAQ

What is the Google bot?

Googlebot is the web crawler Google uses to discover web pages so they can be indexed. It constantly visits websites, follows links, and gathers information for the search engine's indexing process. In doing so, it helps Google understand what content exists on a website so that the content can be indexed appropriately and shown in relevant search results.

How does the Google bot work?

Googlebot visits webpages, follows the links on those pages, and gathers information about their content. Google then stores this information in its index, which it uses to deliver relevant search results when users submit a query.

Does Google have a bot?

Yes, Google uses a web crawler called the Googlebot to discover and index websites. The Googlebot is constantly running to keep Google's search engine up-to-date with new content and changes on existing sites.

What is a Googlebot visit?

A Googlebot visit occurs when Googlebot crawls a website and gathers information about its content. Googlebot visits websites regularly, although the frequency of visits can vary depending on factors such as how often pages are updated or how many other websites link to them.

How Often Does Google Crawl My Site?

The time between crawls can be anywhere from 3 days to 4 weeks, and this frequency varies from site to site. Factors such as page updates, the number of other websites linking to yours, and how frequently you submit sitemaps can all affect your website's crawl rate.
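
To see how often Googlebot actually visits your own site, you can count its requests in your server access logs. The sketch below assumes the widely used Combined Log Format (the default "combined" format in Apache and Nginx), and the sample lines are made up. It matches on the user agent alone, so spoofed bots are counted too; for accurate numbers, verify IPs with a reverse DNS lookup, or check the Crawl Stats report in Google Search Console.

```python
import re
from collections import Counter

# Captures the date part of the timestamp and the final quoted field (user agent).
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[(?P<day>\d{2}/\w{3}/\d{4}):[^\]]*\] '
    r'"[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits_per_day(lines):
    """Count log lines per day whose user agent mentions Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("day")] += 1
    return counts


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [15/Jan/2024:10:12:01 +0000] "GET / HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [15/Jan/2024:18:40:11 +0000] "GET /blog/ HTTP/1.1" 200 8410 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [15/Jan/2024:19:02:55 +0000] "GET / HTTP/1.1" 200 5120 '
    '"https://example.org/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    f'66.249.66.2 - - [16/Jan/2024:07:05:30 +0000] "GET /about HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
]
for day, hits in sorted(googlebot_hits_per_day(sample_log).items()):
    print(day, hits)
```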